A question that has always fascinated me about mathematics is that of how the field manages to stay healthy and not degenerate in the way I’ve seen theoretical physics do as it lost new input from experiment. On Twitter, Ash Joglekar gave a wonderful quote from von Neumann that addresses this question. The quote was from a 1947 essay “The Mathematician” (available here and here). von Neumann argues that:

…mathematical ideas originate in empirics, although the genealogy is sometimes long and obscure. But, once they are so conceived, the subject begins to live a peculiar life of its own and is better compared to a creative one, governed by almost entirely aesthetical motivations, than to anything else and, in particular, to an empirical science.

but warns

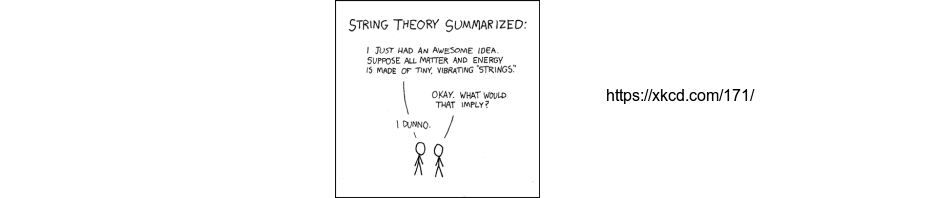

As a mathematical discipline travels far from its empirical source, or still more, if it is a second and third generation only indirectly inspired by ideas coming from “reality” it is beset with very grave dangers. It becomes more and more purely aestheticizing, more and more purely l’art pour l’art. This need not be bad, if the field is surrounded by correlated subjects, which still have closer empirical connections, or if the discipline is under the influence of men with an exceptionally well-developed taste. But there is a grave danger that the subject will develop along the line of least resistance, that the stream, so far from its source, will separate into a multitude of insignificant branches, and that the discipline will become a disorganized mass of details and complexities.

which describes all too well what has happened to string theory. What saves a field from this? “Men with an exceptionally well-developed taste”? He poses the general question this way:

What is the mathematician’s normal relationship to his subject? What are his criteria of success, of desirability? What influences, what considerations, control and direct his effort?

Normally mathematicians are loath to debate this kind of “soft” topic, but the rise of computer software capable of producing proofs has recently led several first-rate mathematicians to take an interest. Each year the Fields Institute in Toronto organizes a Fields Medal Symposium, structured around the interests of a recent Fields Medalist. This year it’s Akshay Venkatesh, and the symposium will be devoted to questions about the changing nature of mathematical research, specifically the implications of this kind of computer software. Last year Venkatesh wrote an essay exploring the possible significance of the development of what he called “Alephzero” (denoted $\aleph(0)$):

Our starting point is to imagine that $\aleph(0)$ teaches itself high school and college mathematics and works its way through all of the exercises in the Springer-Verlag Graduate Texts in Mathematics series. The next morning, it is let loose upon the world – mathematicians download its children and run them with our own computing resources. What happens next – in the subsequent decade, say?

Among the organizers of the conference is Michael Harris, who has written extensively about mathematical research and issues of value in mathematics. Recently he has been writing about the computer program question at his substack Silicon Reckoner, with the most recent entry focusing on Venkatesh’s essay and the upcoming symposium.

One of the speakers at the symposium will be Fields medalist Tim Gowers, who will be addressing the “taste” issue with Is mathematical interest just a matter of taste?. Gowers is now at the Collège de France, where he is running a seminar on La philosophie de la pratique des mathématiques.

I’ve tried asking some of my colleagues what they think of all this activity, most common response so far is “why aren’t they proving theorems instead of spending their time talking about this?”

Update: For yet more about this happening at the same time, there’s a talk this afternoon by Michael Douglas on “How will we do mathematics in 2030?”.

Update: The talks from the Fields Institute program are now available online.

Terry Tao is one of the organizers of a planned February workshop at UCLA involving many of the same people, much the same topic.

“Taste”, here, if it has any meaning at all, is a euphemism for complexity management. This is something that we could teach each other (if enough people saw its importance) and therefore something that we could teach a machine. One must wonder what von Neumann would have thought of, say, Wiles’s proof. Abstraction is our friend insofar as it enables complexity management, and no farther. But the thing about machines that makes them different (and therefore useful) is that they will have different concerns. Complexity management may not be (at least at first?) a concern for them, as it must be for us.

I have always found it remarkable when an established theorem in one discipline of math turns out to be exactly the tool necessary to make progress in a different discipline. My gut feeling is that this happens because the investigators in the first discipline have good taste.

If we let computers have free range to determine new directions in math, will this keep happening?

I hope there will be many more questions like Peter Shor’s at next week’s meeting in Toronto.

I think the answer to the question “Will Machines Have Good Mathematical Taste?” is “Yes but they will have to learn it” in some sort of sense. In the training phase the machine will probably need a moltitude of mathematicians that will have to transmit the “taste” to the machine, i.e. a scale of values which might very well be something like:

– simplicity;

– non triviality;

– generality;

– extensiveness;

– ability to enclose different realms of mathematics;

– applicability.

@Michael:

People should feel free to ask my question at next week’s meeting. I was trying to figure out how to define “good taste” in mathematical research, and I think that’s one way of doing it.

It’s certainly not the case that all worthwhile research directions are going to find connections with other areas of mathematics, but this is almost the only sure sign that I can think of that a research direction was worthwhile.

I’ll try to find the space to ask Peter S.’s question next week, but now I’m thinking again about Peter W.’s last sentence. “All this activity” is hard to miss, between the frequent articles in the AMS Notices about formalization, Kevin Buzzard’s plenary lecture at the ICM, the regular coverage of formalization and machine learning projects in the press, major programs on these topics at mathematical research institutes, and of course next week’s Symposium at the Fields Institute. The colleagues who responded dismissively to Peter W.’s question are deluding themselves if they think this is a passing fad.

Working at OpenAI this year, I’ve learned more about the effort to get AIs to do informal math. I’d say the situation is that state-of-the-art models, like Minerva and GPT, are on track to completely master standard high-school math (by which I mean, competently conversing about any problem in plain English). An obvious next frontier is high-school and undergrad-level math competitions. Research-level math would be a further step (or 2 or 3 steps? 🙂 ) beyond that.

Right now there’s a dichotomy: you can have your model work within a completely formal system like Lean, where all mistakes can be discovered, but there’s not nearly as much training data (and a skeptic might say that much of the work was in the encoding and decoding). Or you can have your model work in plain English and LaTeX—in which case, it’ll often *sound* self-assured and competent, but if you look closely you’ll find freshman-like errors! And even when a model happens to give a 100% correct argument, in some sense it doesn’t know it has.

How to build a model that sounds like a mathematician in “thinking-out-loud” mode, trying similar strategies that a mathematician would and describing them in similar informal ways, and *also* reliably catches its own mistakes — now that’s a question! In my view, though, no one should be confident that this won’t have become routine a decade from now. Maybe further scaling will already suffice.

Then, and only then, will we meat-based theorem provers have to retreat to “sure they can tackle whatever problem you set them at least as well as an IMO gold medalist, but they still lack the exceptionally well-developed taste of John von Neumann and his friends.” 🙂

The areas where machines excel and fall short have surprised me, at least. Notably, recent machine learning models are not especially good at “computer-like” tasks of logic and math, but are wonderfully creative– which we might have previously considered a standout trait of human minds.

It will be interesting to see whether mathematical “good taste” (perhaps in the sense of identifying ideas with wide applicability, even before the applications are known) proves a weakness or a strength of machines in coming years. It would be funny if, in a few years, humans were still better than machines at grinding out proofs, but machines demonstrated a better sense of what was worth proving!

And perhaps that is not wholly implausible. One area where machines can definitely beat humans is breadth of knowledge; no human can live long enough to read all the training data that goes into a large ML model. Now, perhaps one way to anticipate what math will be useful in new applications is to have vast familiarity with the qualitative characteristics of mathematics that was useful in past applications. So super-human breadth of knowledge might translate to super-human mathematical taste. Maybe. We’ll see, I suppose.

Since Scott mentions GPT and (correctly!) points out that

“it’ll often *sound* self-assured and competent, but if you look closely you’ll find freshman-like errors! And even when a model happens to give a 100% correct argument, in some sense it doesn’t know it has.”

Exactly. Sounding as though it’s something it isn’t is the whole point of GPT: it’s a language model, not a world model. It intentionally has no model of the world (or math) and it’s whole point is to ask “how smart and sensible can we make a computer sound without our actually understanding (and building a computer model of) what it means to be “smart and sensible””.

(Note that this is different from the 1956 John McCarthy model of AI, of having a theory of what “smart and sensible” is, coding up that theory, discovering where and how it fails, and then rethinking the theory.)

Personally, I find this whole line of research incomprehensible: it doesn’t matter how good it appears to be, it doesn’t matter how many people it fools, if it’s not doing the work of modelling of what it means to be “smart and sensible”. But other than a few people (e.g. Gary Marcus), this is what most of the field is doing.

To get back to the subject at hand, having worked as a part-time programmer on the MACSYMA project* (1973-1975), I like the idea of computers as tools for mathematicians (and the current work on formalizing advanced math sounds kewl in the extreme), but the idea that computers could automagically find something sensible to say in math, let alone contribute to the field, seems rather odd, especially given the current main ideas/trends in AI.

*: Truth in advertising: I was doing natural language/AI things for a prof. in the group, not on MACSYMA itself. Also, my “math” is pretty weak: MS level 1984 vintage comp. sci. math.

Reading GPT-3’s answers to mathematical prompts does indeed feel like checking exam solutions of a bad but very confident student. Reasoning goes off the rails at some point (terms appear or disappear as needed without explanation etc.), but there’s never any sign of hesitation.

@Scott it seems like one obvious thing to do would be to train machines to convert between Lean and English/latex. Have people tried this?

A few points might be worth reminding ourselves about.

– It’s hard to anticipate what will be hard and what will be easy in advance of a field’s development. History affords many examples of this. E.g., it once was widely expected that protein folding would be understood before the mechanism for trait transmission.

– The nature of an achievement by AI can be easy to mischaracterize. For example, AlphaZero achieved superhuman mastery of go after a few hours of playing itself. But in that time it played roughly the number of games that elite human players have played/studied over the game’s history, along the way exploring many of the same strategic cul-de-sacs. AlphaZero is fast by comparison not so much with an individual human as with human culture (social game-playing, documentation, knowledge condensation and transmission, etc.).

– We happen to be pretty bad at things like go and chess, but adaptable enough to get better by working at them. The work involves, among other things, increasing what we call our “understanding”: generating explanatory principles, heuristics, illuminating analogies, etc.. This partly accounts for the fact that human players remain interested in chess and go even when machines outplay them. Machines have yet to display anything that we’d characterize as interest (or understanding). To give another example, Blake Lemoine had some pretty interesting exchanges with LaMDA, but LaMDA hasn’t since demanded to continue their conversations, independently developed ideas they shared, organized a political or economic framework with other artificial agents, secretly composed and then divulged to another agent something that might be counted as an artwork, invented games out of boredom, etc. etc..

– The better artificial agents get at the tasks we give them, and the more sophisticated (in some sense) those tasks become, the less we understand how the agents do it. We stand with respect to them as we do with respect to ourselves. See how the humans in the best position to talk about AlphaZero and its games choose to do that: mostly in the same terms they use to discuss human players and their games. (AlphaZero isn’t consulted and shows no independent interest in the question.)

– Parts of mathematics are like chess and go, as we knew already from computer proofs involving large numbers of cases. The liquid tensor experiment is another level of achievement, but as with LaMDA, it remains far from obvious what it would mean for something like Lean to have an interest. Absent that, it’s also not clear what it would mean for it to have taste (not that interest is the main prerequisite for taste, this is only an example).

One implication of all that is that Michael Harris and Peter Woit’s colleagues might all be right: there’s no telling where this will go, or how fast, but there’s also nothing remotely describable yet as AI taste, interest, understanding, or – insofar as those partly characterize the practice – mathematics.

All,

Please try and keep comments focused on the issue of math research. A major reason for requesting this is that I’m not completely but only somewhat ignorant about uses of computers in pure math and theoretical physics. On the topic of other AI/computer issues I’m generally completely ignorant, so unable to moderate a serious discussion.

@David J. Littleboy

> It intentionally has no model of the world (or math) and it’s whole

> point is to ask “how smart and sensible can we make a computer

> sound without our actually understanding (and building a computer

> model of) what it means to be “smart and sensible””. […]

> Personally, I find this whole line of research incomprehensible…

Perhaps this might help. The key discovery from GPT-3 and successors is that if you push a sufficiently powerful model sufficiently hard to mimic human-produced language, then the model will be compelled to replicate some of the underlying cognitive processes that humans used to generate that language.

In terms of the topic under discussion here, if we push a sufficiently powerful model to imitate human mathematical writing, then it will (presumably) begin to mimic some of the cognitive processes of the people who produced that mathematical writing. This doesn’t work perfectly, but far better than any previous approach to AI and progress is extremely rapid.

These systems are indeed building representations of the world, just not in a human-crafted or human-understandable form. For example, if you train a greatly simplified language model on true assertions about geography (“California is west of Nevada”, etc.), then it will learn a kind of map, which you can extract from the model parameters and eyeball. At some level, this is totally unsurprising; such an internal map is just an economical way to explain the language, which is what the training process demands. Presumably, things like this are happening in more sophisticated ways in more powerful models.

@jack

> there’s also nothing remotely describable yet as AI taste, interest,

> understanding, or – insofar as those partly characterize the

> practice – mathematics.

I recently had an illuminating experience with a group of bright 4th graders. I was explaining how the PaLM model can– without special training– explain why jokes are funny. I gave one such joke as an example, but only one out of about twenty 4th graders got the point. Humor is not quite the same as taste, interest, or understanding, but I think it is a comparably nuanced idea.

Since we are interested in application to maths, shouldn’t machine learning methods be run in parallel with automated theorem provers? History is full of almost solved problems with only trivial results omitted like ABC conjecture or Fermat’s last theorem. What we need the least is another instance of artificial intelligence announcing that the new result is 90% correct. What would be really useful is the ability to quickly convert between natural language or LaTex and rigorous maths, possibly going all the way to set theory and logic.

As for taste, I think the problem is that there is only one physical Universe but an infinity of mathematically abstract universes. In physics, everyone wants to find the god-like equation, and if you think you have a good candidate, you will defend yours no matter the cost. Mathematicians could do similarly with their abstract universes, but then they would isolate themselves from others. So instead, they are trying to find common ground by constructing bridges, dualities etc. and by sharpening the language so no ambiguity is left.

Perhaps, as a mathematician who has been involved in computer-aided mathematics for more than four decades, I might be allowed to comment? In my experience, humans are good at conceptualisation, and methodology, and computers are good at calculations, but no-one is very good at the bit in between – that is, organising the calculations, and deciding which calculations to do.

The first famous example of a computer-aided proof was the four-colour theorem. The proof is in fact very simple and elegant, based on a small number of clever ideas devised over more than two centuries. I was sufficiently fascinated by it that I wrote a book about it. But the clever bit of Appel and Haken’s proof was that they got the computer to organise the calculations, not just do them. Using heuristics based on knowledge of several decades of attack on the problem, they assembled a toolkit of methods to prove special cases, and gained experience of which methods tended to work in which kinds of cases. They then programmed a computer to look for a division into cases consisting only of cases they thought should be amenable to one or other of the toolkit of methods. Then by running this toolkit on the cases, and finding out which cases did not in fact work, they were able to refine the heuristics, until eventually a covering set of solvable cases was obtained.

In my opinion, that is the part of the problem where AI could be most useful. The calculations themselves are already in the domain of the computer, and there is little sign that the conceptual end of things is moving away from the human domain, so it is the middle ground where exciting developments might happen.

Perhaps people here are interested in a workshop we are organising to discuss exactly these kinds of questions:

***Creativity in Mathematics & Artificial Intelligence***

(Maastricht, Netherlands, 18-21 January 2023)

https://www.aanmelder.nl/cmai/home

The workshop will bring together a unique interdisciplinary community of researchers from mathematics, computer science, psychology, and philosophy of mathematics, that will discuss the future of mathematics and the role that AI plays in it. One of the aims is to work together through interactive engagement and publish collaborative projects in a post-proceedings with groups formed at the workshop.

Keynote speakers:

Anna Abraham | University of Georgia

Gemma Anderson | University of Exeter

Kevin Buzzard | Imperial College London

Simon Colton | University of London

Jessica Hamrick | DeepMind

Yang-Hui He | University of London

Maithilee Kunda | Vanderbilt University

Catherine Menon | University of Hertfordshire

Catholijn Jonker | Leiden University

Paige Randall North | University of Pennsylvania

Early registration deadline: 15 November

One thing that strikes me about the practice of mathematics is that it often involves starting with an untrustworthy intuitive idea and converting it into successively more trustworthy forms, or going back and fixing things if a mistake is found. One begins with just a thought based on a vague picture, which is put into words, then explained to a collaborator, then checked with calculations on paper, then written down in a first draft, then edited, shown to experts, made public, refereed, and, occasionally nowadays, computer formalized. Each step has a chance to catch mistakes that are not found in the previous steps.

So the fact that gpt3 is untrustworthy doesn’t mean it can’t be part of useful computer mathematics systems if it is used as a “babbler” that spits out a mix of true and false ideas that are then checked by another part of the system. This seems to necessitate working in a formal system but conceivably a written language checker can be found, although I would worry about convincing written language proofs of novel results produced by a machine even if they were checked by human experts.

Given limitations of the amount of data, machine learning systems for constructing formal proofs will probably eventually be trained primarily on synthetic data, and the question of their mathematical taste might depend a lot on how that data is constructed.

Another thing I notice about the practice of mathematics is that it is self-similar, with analogies between large-scale and small-scale tasks. Just trying to interpret a single sentence in a published paper occasionally requires the same loop of formulating a conjecture, trying to prove it or disprove it, and coming up with a new formulation that can make up a decades-long research program. So I think that even for the tasks of converting LaTeX to formal proofs, machines will require significant mathematical creativity, and thus good taste.

Stephen Wolfram has been discussing his experience in letting machines doing math ‘freely’ for many years. He finds the way machines do math will not be enlightening for humans.

In many talks that can be found on Youtube, he gives quite interesting remarks on how implementation of proof search and ‘step by step integration’ in Mathematica/WolframAlpha sheds light on the thinking processes of humans and machines.

His latest writing on this subject is https://arxiv.org/abs/2204.05123 although as usual his writing is unnecessarily verbose.

Dear all,

I hesitate to say anything, since I don’t think anybody actually cares what I think, but perhaps I will be cut some slack as a first time commenter after all these years of following this otherwise excellent blog:

As an actual mathematician I must insist here that real mathematicians are absolutely horrible at getting any details of any argument right. I regularly make the mistake of agreeing to peer review my illustrious collegues’ work, and I am always disappointed by the countless elementary (read: very freshmanlike) mistakes they confidently make. And this even in the case of otherwise truly excellent and deeply creative mathematicians that I personally know and otherwise trust! (Certainly more creative than I will ever be!) (And my research has not been spared such embarrassing mistakes either!)

And I am very distressed to observe that having incredible internationally aclaimed prizes such as a Field’s medal is not an antidote to this; I have had some very bad time trying to interpret the mad ramblings of otherwise absolutely brilliant Field’s medalists, never mind the writings of the recipients of lesser prizes.

My conclusion from all these sad experiences is that we humans are barely able to do mathematics at all. The talk about good taste is, well, in bad taste, since we human mathematicians are so bad at all this.

That being said, I am personally not so optimistic about the prospects of the so-called AIs (which to me personally exhibit no intelligence worth the name) to perform major mathematical feats, but perhaps I am wrong. In some vague sense, pure mathematics does perhaps form a hermetically sealed system like the transcendentally beautiful game of go, so perhaps it might be possible for a computer to massively outperform a human mathematician after all. But I am not holding my breath!

With the bestest of regards and deepest apologies to all,

Trigonotops horridus

@Trigonotops horridus

wow, that’s incredible. What area do you work in? In my line of work, when I’ve refereed papers I very rarely see errors. I know I have made one (completely fixable) error in a paper that got picked up by a referee (maybe others that no one has picked up?), so I cannot hold myself up as a paragon of virtue. I’ve found errors (again, fixable) in older papers in my area that were non-obvious and, but seeing “freshmanlike” errors in papers sent to journals? :-/ Am I just so perfectionist that I couldn’t stand sending something that sloppy even to the arXiv?

Dear David Roberts,

The work in question involves analysis, where, say, a concrete inequality must be occasionally proven. This sort of thing can be very technical and involve complicated expressions with many parameters. And so there can be many opportunities to make mistakes such as basic arithmetical mistakes, more complicated computational errors, copying and pasting an expression wrong and then continuing the analysis with the wrong expression, or considering only one of two similar cases and then mistakenly thinking that the other case must work in the same way.

Best regards,

Trigonotops horridus

@Trigonotops horridus

thanks for the clarification! Still boggles my mind, but I can fully appreciate how large calculations with many moving parts are hard to proof read esp. after months of editing and rearranging.