In this week’s Nature, Abraham Loeb, the chair of the Harvard astronomy department, has a column proposing the creation of a web-site that would act as a sort of “ratings agency”, implementing some mathematical model that would measure the health of various subfields of physics. This would provide young scientists with more objective information about what subfields are doing well and worth getting involved with, as opposed to those which are lingering on despite a lack of progress. Guess what Loeb’s main example is of the “lingering on” category?

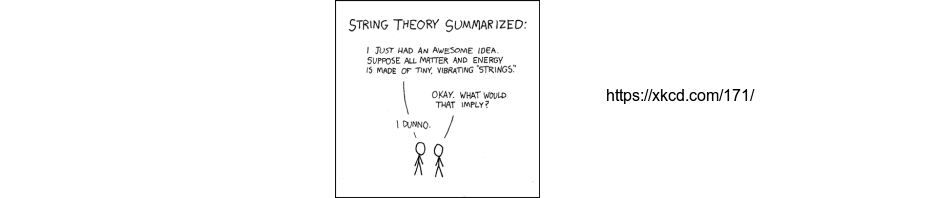

In physics, the value of a theory is measured by how well it agrees with experimental data. But how should the physics community gauge the value of an emerging theory that cannot yet be tested experimentally? With no reality check, a less than rigorous hypothesis such as string theory may linger on, even though physicists have been unable to work out its actual value in describing nature…

Theory bubbles

The study of the cosmic microwave background provides an example of how theory and data can generate opportunities for young scientists. As soon as NASA’s Cosmic Background Explorer satellite reported conclusive evidence for the cosmic microwave background temperature fluctuations across the sky in 1992, the subsequent experimental work generated many opportunities for young theorists and observers who joined this field. By contrast, a hypothesis such as string theory, which attempts to unify quantum mechanics with Albert Einstein’s general theory of relativity, has so far not been tested critically by experimental data, even over a time span equivalent to a physicist’s career.

The problem, of course, is deciding who gets to make evaluations of what's a healthy field and what isn't. People with a lot invested in a dying or dead subject have strong incentives to misrepresent the situation (see, for example, the famous Monty Python Dead Parrot sketch). Loeb implicitly compares the current situation in string theory to the one that led up to the financial crisis, a crisis made worse by ratings agencies assigning AAA ratings to debt that was not far from default.

Senior scientists might seem the people best suited to rate the promise of research frontiers. But too many of these physicists are already invested in evaluating the promise of these speculative theories, implying that they could have a conflict of interest or be wishful thinkers. Having these senior scientists rate future promise would be akin to the ‘AAA’ rating that financial agencies gave to the very debt securities from which they benefited. This unseemly situation contributed to the last recession, and a long-lived bias of this type in the physics world could lead to similarly devastating consequences — such as an extended period of intellectual stagnation and a community of talented physicists investing time in research ventures unlikely to elucidate our understanding of nature — a theory ‘bubble’, to borrow from the financial world.

The problem of how to get scientists and academics to rigorously evaluate what works and what doesn't is a difficult one. In particle physics, past success has made further progress harder to come by, so just noticing that progress is slower than in earlier times is not enough. String theorists are right to point out that developing the theory to the point where it can be usefully compared to experiment may be a difficult goal that takes a long time to reach. They're wrong, though, not to acknowledge that they're not getting closer to that goal, but rather farther and farther away from it. And misrepresentations about the state of a subject can victimize young students and researchers, who are induced to devote crucial parts of their lives to something not worthwhile.

I'm rather skeptical about Loeb's faith in mathematical models to provide objective guidance. The AAA ratings assigned to dubious mortgage-backed securities were the product of mathematical models, defective ones. If you let me design the model, I can come up with one that will justify whatever conclusion I want. In the end, outcomes will depend on the quality of the judgment and decisions of those the community chooses as its leaders. Throughout academia, bad ideas live on, and good ones don't get the recognition they deserve. At the same time, in many fields those put in positions of responsibility live up to them and often do a remarkable job of countering the forces promoting stagnation, as well as providing a positive vision that drives real progress.

As for Loeb’s idea about a web-site where young scientists could go to get information about the health of a field, I remain skeptical about prospects for one that implements a mathematical model. However, a website devoted to honest and informed discussion about what is going on in a field and whether it is healthy, providing a place for students and others to listen to and participate in debate, helping them make up their own minds, seems to me an excellent idea…

Update: I just noticed that Loeb had a paper on the arXiv last year spelling out his proposal in more detail.