A few short items:

- No Higgs news on the LHC front, but on the BSM front today’s CERN talk “Update on Searches for New Physics in CMS” provides more evidence against the various exotic scenarios heavily advertised over the last twenty years. No sign of extra dimensions, SUSY, or other exotics. For more, see Resonaances. SUSY proponents now seem to be somewhere between the first stage of grief (“denial”) and the second (“anger”, see e.g. here), and on their way to the third (“bargaining”: “I’ll do anything for a few more years”).

- From Sean Carroll’s Twitter feed I learned about this long description of a recent workshop bringing together philosophers and quantum field theorists. I guess my take on the “heuristic” vs. “mathematical” quantum field theory debate is that we need both, since there is still a huge amount we fundamentally don’t understand about QFT.

- For a finite number of degrees of freedom, quantum mechanics itself is, unlike QFT, rather well understood. For a nice recent review of some topics in the mathematics of quantization, see Ivan Todorov’s Quantization is a mystery.

- It’s not only string theorists producing over-hyped university press releases; there are things like this. See more discussion here.

After browsing Todorov’s text, I was reminded how short a shrift is given to the probabilistic and statistical nature of quantum theory. The virtues of group theory and symplectic spaces are extolled by virtually every mathematical physicist, yet quantum theory is equally an exercise in probability theory. Looking back on grad school, I am surprised how little probability and statistics I was taught.

Hey Peter, when are you going to review Lawrence Krauss’s new popular science book?

Enrico,

See

http://www.math.columbia.edu/~woit/wordpress/?p=4385#comment-103275

Peter, there continue to be talks/hype on extra dimensions.

See

http://pirsa.org/12010130/

by C. Burgess

Shantanu,

I suspect that many if not most of the people who have been promoting this kind of thing their entire professional lives will stay stuck in the denial phase forever. The attempt by Burgess (and others) to claim the LHC not seeing anything as a success for their theory is pretty amusing.

My TOE is that everything is turtles, very small ones, of size about (100 TeV)^-1. So, not visible at the LHC, thus the latest data there is a huge success for my theory.

When I read the abstract, I thought that Dr. Andrulis’ theory could very well be a hoax. Then I scanned the manuscript, and am convinced that my first impression was wrong. Nobody would go to that much trouble to be a joker, no matter how deranged. Perusing his other publications, it’s all quite solid and uncranky. I don’t know what to make of it.

OK, having read a bit more, I do know what to make of it: the guy is nuts. Certifiable. It’s monomaniacal crankery of the first water. No matter how coked up the guy might have been to hasten production, this thing must have taken many months of painstaking research, however bizarre the inferences he drew from his hundreds of references. He practically invented a new language just to convey the details of this…I don’t even know what to call it; “theory” just isn’t up to the task. It looks to be the mother of all crackpot opera, and somewhat disturbing to read.

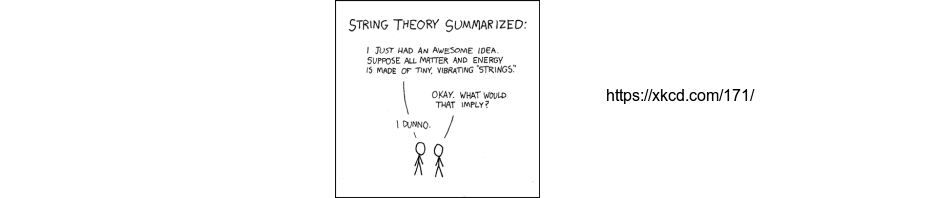

low math, etc. – Could be a crying need for a journal for TOEs short on T, where O = 0, long on E, with peer-review standards fixed by xkcd; call it Doodles Review.

Andrulis’ theory is not a theory, it is a completely useless language that tries to put everything under some kind of common ground. I have to say it: it is weird beyond imagination.

Interesting stuff. Add Gyres to Circlons: Margaret Wertheim would be proud of this stuff / guff.

Is this a harbinger of non-science becoming more difficult to combat due to a rapid veneer of internet credibility? I don’t think so. Hyped for a moment, blogs like this refute it pretty quickly.

The pace of theory generation may have increased – but the testability / prediction filter now seems even more precious.

The comments on the CMS results also show how String Ideology seems more and more a zombie theory – still going, unkillable, yet lifeless.

@Enrico

I did indeed suggest this book, as Peter points out. I am afraid the invocation of multiverses is used quite a bit, and though I think Lawrence Krauss is a charismatic author, this preference for multiverses continues to vex me. It’s a giant hand-waving answer when more grounded unresolved basics such as quantum pressure seem to have much more life in them.

To quote from the article on the Ars Technica website that Peter links to above:

So perhaps we will finally have an answer to the question of whether the Moon is there, or not, when nobody is looking!!

I just checked out Pharyngula, and the discussion there seems to confirm it: Dr. Andrulis is probably not well. Perhaps it’s best I retract some of my harsher statements and hope he gets the help he needs.

So, better to focus on the real issue here, then (which I think was Peter’s intent anyway): How? How in the bloody HELL did this get past Case Western’s filters, or do they have none?

>quantum theory is equally an exercise in probability theory

Along the lines of Lucien Hardy (http://arxiv.org/abs/quant-ph/0101012) and Ray Streater (http://arxiv.org/abs/math-ph/0002049) I suppose? Ray is way over my head here though.

LMMI:

How in the bloody HELL did this get past Case Western’s filters

At least in my experience, academic institutions don’t generally have filters that would prevent something like this. I’ve certainly always submitted directly to the journals, nothing was ever approved beforehand by some university level official (and a good thing too).

CWRU’s screw-up was in issuing the press release, assuming they’re the ones who issued it. If that’s what you meant, then I agree. Someone’s alarm bells should have gone off before they reached the point of publicizing this stuff.

(stupid unrelated question: why do none of the standard methods for inserting line breaks seem to be working?)

Never mind…

Yes, I meant the hyping. It is not possible to avoid overstating the importance of Dr. Andrulis’ work because, from a scientific perspective, it is utterly bereft. Anyone who reads even the abstract ought to be able to recognize that it is in the interests of all concerned to avoid public acknowledgement that it exists.

Low Math, Meekly Interacting,

As an RPI undergraduate many years ago, I recall coming across some curious little volumes among their physics books, the contents of which were quite similar to Dr. Andrulis’ writing. I’ve sampled quite a few crackpot musings on the web since. They all seem fundamentally quite similar—a certain kind of barely coherent word salad that conveys a feeling that the author imagines himself in possession of a vast and indubitable insight into the nature of reality. I’ll bet reams of this stuff get written every year, albeit rarely with the visibility granted to faculty at reputable institutions.

I guess it’s old news on the interwebs, but a few of Life’s editors, who were reportedly unaware of the paper until after publication, have resigned in disgust. Wonder if any heads will roll at Case.

Peter have you seen this?

Any comments?

http://blogs.nature.com/news/2012/01/us-physicists-call-for-underground-neutrino-facility.html

In my last comment I meant to say:

“As an RPI undergraduate many years ago, browsing the university library, I recall coming across some curious little volumes among …”

Shantanu,

Interesting to see the strong endorsement from so many theorists. I haven’t been following the LBNE story; I had assumed that closing the Tevatron while keeping the US HEP budget fairly constant would free up funds for it.

Agreed. However, I am surprised that the theorists in the letter still believe proton decay will be seen. Also, as I think we discussed here, non-zero neutrino mass really doesn’t tell you much about physics beyond the standard model.

Peter wrote:

Thanks for the tip. Now I understand where the idea that “AQFT is a failed research program”, which I have read in several places in the blogosphere, comes from. It is from

David Wallace, “Taking Particle Physics Seriously: a Critique of the Algebraic Approach to Quantum Field Theory”

While I could explain what Wallace gets wrong, in the spirit of the “string wars” I will instead mention that Ed Witten chose to use the framework to make some of his earlier ideas mathematically precise, see the arXiv. See?

While I agree with Wallace that philosophers of science should not limit themselves to theories that are mathematically precise (ignoring Lagrangian QFT and concentrating on AQFT, in this case), I get the impression that he (like several theoretical physicists) does not actually understand what “mathematically precise” means to mathematicians. He writes that Lagrangian QFT is, in this regard, on the same footing as QM, i.e. molecular and nuclear physics etc. From his paper:

It would seem that this misunderstanding is also behind a lot of back and forth regarding the statement that “string theory is mathematically consistent”.

Hi Tim,

I agree with you (in disagreeing with Wallace) that AQFT should not be considered a failed research program, and with Peter that people interested in understanding QFT should marshal any resources available. (As an aside, I was actually at the conference in question, and that tended to be the consensus view.) However, I don’t think the “failed research program” claim really originates with Wallace. I’ve heard it from physicists more than a few times, including some whom I consider more concerned with rigor than most, as well as some who wouldn’t be caught dead reading a paper published in a philosophy journal. So my experience suggests that Wallace’s claim is probably a symptom, rather than a cause, of the attitude you report seeing in the blogosphere.

Insofar as after more than 50 years one cannot calculate cross sections with AQFT, it is certainly a failed research program.

A bit more honesty about conventional QFT by Wallace, though, would be welcome.

QFT, as originally formulated, does not work when interactions are introduced. We therefore do not use it for this purpose. Instead our interacting theory comprises a set of invented fitting functions. Suggesting that these derive from quantum field theory is a nonsense as this would involve extracting unique finite values from the difference of two infinite ones. This is impossible, as all should know.

CU Phil said:

Of course I do not know where this claim originates, but since Wallace does not cite anyone else, we could simply stick to discussing what he himself wrote.

Of course a research program can fail only in relation to a specific goal (some people seem to use the phrase to express their dislike, as in “AQFT is a failed research program, I don’t like it because I have developed a metaphysical allergy to operator algebras”; I hope it is possible to keep that out of the discussion).

Wallace claims that the goal of AQFT was to clear up the mess with infinities in QFT, and especially the need for renormalization. That may have been the motivation of some researchers, but the core motivation for the program was and is to have a mathematically precise formulation of what a QFT is, one where you can actually prove theorems. As I said before, I now doubt that Wallace even understands what that means.

Then, if the goal is to “calculate cross sections before the year 2000”, the program has failed, too. Wallace keeps pointing out that there is no interacting 4D QFT that fulfills the Haag-Kastler axioms yet, and that he does not see any reason to believe that there ever will be. Well, the advantage of the axiomatic approach is that, in such a case, you can go back to the drawing board and learn what was wrong with the axioms.

I would really like to know whether people who say that the Haag-Kastler axioms don’t describe (the Heisenberg picture of) realistic QFTs have specific ideas about what is wrong with the axioms.

Hi Chris,

You said, “QFT, as originally formulated, does not work when interactions are introduced. We therefore do not use it for this purpose. Instead our interacting theory comprises a set of invented fitting functions. Suggesting that these derive from quantum field theory is a nonsense as this would involve extracting unique finite values from the difference of two infinite ones. This is impossible, as all should know.”

No field theorist believes in QFT as it was originally formulated. The original formulation, as a Lagrangian or Hamiltonian with finite interactions is simply wrong. That’s why regularization (is this what you mean by “fitting functions”?) must be introduced, then removed. If you do this in the right way, there are no infinities (see below) and the result is unique (we believe). The modern “conventional” viewpoint is that this IS the QFT – the limit of a regularized theory. Most field theorists are very comfortable with this.

A regularized QFT in a finite volume has an interaction representation. There is a “Haag’s theorem” ruining the interaction representation, when crossing critical couplings, but we work away from these couplings, approaching them only as we remove the regulator. One never encounters anything infinite, provided renormalization is done this way. I want to stress that this is not the way Feynman, Schwinger or Tomonaga thought about QFT in the 1940s – that point of view is long obsolete.
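A toy illustration of the point above, and of Chris’s “difference of two infinite ones”. The function here is a hypothetical stand-in, not an actual QFT amplitude: it evaluates I(Λ, m) = ∫₀^Λ k dk / (k² + m²) = ½ ln((Λ² + m²)/m²), which grows without bound like ln Λ as the cutoff Λ is removed, roughly as a logarithmically divergent one-loop integral does. At every finite Λ both integrals are ordinary finite numbers, and their difference converges to ln(m2/m1) as Λ → ∞, so no infinite quantity is ever subtracted. The names `cutoff_integral` and `subtracted` are illustrative only.

```python
import math


def cutoff_integral(cutoff: float, m: float) -> float:
    """Toy 'loop integral' I(cutoff, m) = integral_0^cutoff k dk / (k^2 + m^2).

    Closed form: 0.5 * ln((cutoff^2 + m^2) / m^2). It grows like ln(cutoff),
    so it has no finite limit as the regulator is removed.
    """
    return 0.5 * math.log((cutoff**2 + m**2) / m**2)


def subtracted(cutoff: float, m1: float, m2: float) -> float:
    """Difference of two regularized integrals taken at the SAME finite cutoff.

    Each term is finite; as cutoff -> infinity the difference tends to ln(m2/m1).
    """
    return cutoff_integral(cutoff, m1) - cutoff_integral(cutoff, m2)


if __name__ == "__main__":
    m1, m2 = 1.0, 2.0
    for cutoff in (1e1, 1e3, 1e6):
        print(
            f"cutoff={cutoff:9.0e}  I(cutoff, m1)={cutoff_integral(cutoff, m1):8.4f}  "
            f"difference={subtracted(cutoff, m1, m2):.6f}"
        )
    print("limit ln(m2/m1) =", math.log(m2 / m1))
```

Running it shows the single integral growing with the cutoff while the difference settles toward ln 2 ≈ 0.693. Whether such a finite limit is unique and regulator-independent in a genuine interacting QFT is exactly what the thread is arguing about; the toy only shows the mechanics of taking the limit after the subtraction.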

My impression is that people who don’t like regularization are repelled esthetically. They feel regularizations are somehow dirty. I don’t know if this is the best analogy, but I think it’s like hating Riemann sums as a way of doing integrals and wanting some “purer” method, e.g. Lebesgue integration. That’s great, if you know how to use the purer method to do calculations or prove theorems. Unfortunately, that’s a tall order for algebraic/axiomatic methods.

I think that the point of axiomatic or algebraic approaches is to avoid regularization and start with the theory which already has the (renormalized) S-matrix and Green’s functions. But the conventional approach has the same goal.

We have some understanding of why results don’t depend on the regularization. We define the theory as a limit of the regularized theory, in which we take the bare couplings to zero (in the case of QCD). There are different ways of doing this, but there is numerical and analytic evidence (though not a proof) that the result is unique.

Some algebraic field theorists are looking at conformal or massive integrable theories in 1+1 dimensions. I suspect that’s the best place for them to make progress, at least in the short term. These theories can be “constructed” (I am not sure that is the right term) with no regularization, but we know their S-matrices and something about their Green’s functions.

@Chris Oakley:

“this would involve extracting unique finite values from the difference of two infinite ones. This is impossible, as all should know.”

What about renormalization as a Birkhoff decomposition (the work of Connes and Kreimer around 2000)?

@Peter Orland

As a mathematician who has aesthetic issues with regularization, I have a question: does the Riemann sum/Lebesgue analogy hold, in that there are cases in the QFTs you can’t handle but perhaps could handle with a better formulation? The Lebesgue integral is clearly better than the Riemann one, since it works for a (much) wider variety of functions. Is there some hope one might get a formulation of QFT which did the same thing?

Jeff,

Maybe some new magical idea will appear, but the problem here doesn’t seem to be with the kind of integral you are using, but with the fact that the effective behavior of the fundamental degrees of freedom is changing as you vary the distance scale.

For asymptotically free theories like QCD, what is happening is that the behavior is approaching that of a free theory on distance scales going to zero, so in principle under better and better control as you go to zero distance. We believe there is a well-defined limit for these theories and have a conjectural precise statement about how it works (and the way it works isn’t just a technicality of properly defining a measure). Proving these conjectures though is hard (worth a million dollars from Clay), but the difficulty is not at short distances, but that you have to also understand what happens at large distances, where the physics is strongly coupled and perturbation theory is useless.
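For orientation, here is the standard one-loop statement behind “approaching a free theory on distance scales going to zero” (textbook background, not taken from the thread). For SU(3) gauge theory with $n_f$ light quark flavors, and $\Lambda$ the scale at which the one-loop coupling blows up:

$$
\mu \frac{dg}{d\mu} = \beta(g) = -\frac{b_0}{16\pi^2}\, g^3 + O(g^5), \qquad b_0 = 11 - \frac{2}{3}\, n_f ,
$$

$$
\alpha_s(\mu) \equiv \frac{g^2(\mu)}{4\pi} \approx \frac{4\pi}{b_0 \ln(\mu^2/\Lambda^2)} = \frac{12\pi}{(33 - 2n_f)\,\ln(\mu^2/\Lambda^2)} .
$$

Since $b_0 > 0$ for $n_f \le 16$, $\alpha_s$ goes to zero only logarithmically as $\mu \to \infty$ (short distances), while the same formula breaks down as $\mu \to \Lambda$, which is the strongly coupled large-distance regime where perturbation theory is useless.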

For non-asymptotically free theories like QED, or the Higgs theory, and for non-renormalizable theories like gravity, the degrees of freedom are not weakly coupled as you go to short distances. So understanding the continuum theory requires some precise understanding of the theory non-perturbatively, and we have very little to go on there. Maybe some new notion of “integration” will solve this, but it looks like it would have to be something much more powerful than a simple technical trick. People have tried many technical tricks, but I think the reason nothing has really worked is that your “trick” needs to essentially solve exactly a non-trivial quantum theory with an infinite number of degrees of freedom, away from any limit we understand.
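A minimal numerical sketch of the contrast between asymptotically free and non-asymptotically free theories, using only one-loop running couplings (a deliberate simplification). The inputs are illustrative assumptions: QCD with n_f = 5 and Λ = 0.2 GeV, and QED with the electron as the only charged particle, so the numbers are not precision values (the electron-only QED coupling at 100 GeV comes out near 1/134, not the measured value, which includes all charged fermions). The point is only the direction of running: the QCD coupling shrinks at short distances, while the QED coupling grows and, at one loop, hits a Landau pole at ln(μ/μ0) = 3π/(2α0).

```python
import math


def alpha_s_one_loop(mu_gev: float, lambda_gev: float = 0.2, n_f: int = 5) -> float:
    """One-loop QCD coupling 12*pi / ((33 - 2 n_f) * ln(mu^2 / Lambda^2)).

    Lambda = 0.2 GeV and n_f = 5 are illustrative defaults; the formula is only
    meaningful for mu well above Lambda.
    """
    b = 33 - 2 * n_f
    return 12 * math.pi / (b * math.log(mu_gev**2 / lambda_gev**2))


def alpha_qed_one_loop(mu: float, mu0: float, alpha0: float = 1 / 137.036) -> float:
    """One-loop QED coupling with a single charged fermion (the electron).

    1/alpha(mu) = 1/alpha0 - (2 / (3 pi)) * ln(mu / mu0); the coupling grows with mu.
    """
    denom = 1 - (2 * alpha0 / (3 * math.pi)) * math.log(mu / mu0)
    if denom <= 0:
        raise ValueError("scale is at or beyond the one-loop Landau pole")
    return alpha0 / denom


def qed_landau_pole_log(alpha0: float = 1 / 137.036) -> float:
    """ln(mu_pole / mu0), where the one-loop QED denominator vanishes."""
    return 3 * math.pi / (2 * alpha0)


if __name__ == "__main__":
    m_e = 0.000511  # electron mass in GeV, used as the QED reference scale
    for mu in (2.0, 10.0, 100.0, 1000.0):
        print(
            f"mu={mu:7.1f} GeV  alpha_s={alpha_s_one_loop(mu):.4f}  "
            f"1/alpha_QED={1 / alpha_qed_one_loop(mu, m_e):.2f}"
        )
    print("one-loop QED Landau pole at ln(mu/m_e) =", round(qed_landau_pole_log(), 1))
```

The Landau pole sits absurdly far above any physical scale, so it is better read as a sign that one-loop QED cannot be continued to arbitrarily short distances than as a prediction.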

Jeff,

This is just to add to what Peter W. has said:

To answer your question as to whether one can do better than the standard approach – it isn’t clear. There are (non-rigorous) exact calculations in some field theories with no regularization at all. These exist only in one space and one time dimension. There is no powerful general method, except in the conformally-invariant case. In the massive case (sine-Gordon, nonlinear sigma models, Gross-Neveu models), a lot of guesswork is involved, though the results are remarkable.

Such techniques don’t generally work in higher dimensions, though there is good reason to be optimistic for conformally-invariant supersymmetric gauge theories.

Thanks, Peters. First Peter, I wasn’t thinking some clever new integral might work; I was just using it as an analogy, my thought being that perhaps one might come up with a new way of looking at things (as Lebesgue did) to eliminate the problems. Second Peter, I gather from what you say that this can be done, but only in 1+1 dimensions, which I would think is quite far from being in any way generalizable. At least in my field, 2 dimensions is very, very different from 3, which is very different from 4+…

I was watching Arthur Jaffe’s Simons Center talk the other day. He motivated work on QFT by the enormous success of QED in predicting g-2, and then went on to lament the lack of effort devoted to rigorous algebraic results. To me, this seems like a rather strange argument. We strongly believe that QED is just an effective field theory, so presumably there will never be a rigorous algebraic construction of QED.

What also strikes me as slightly odd is that we do think that Wilson successfully “constructed” QCD, as the continuum (and infinite volume) limit of the lattice regularized version. It would seem like an interesting research program for mathematically inclined folks to show that the limit exists, and that it satisfies certain axioms. (In fact, it seems to me that this would be the most promising avenue towards proving confinement, because we already know that the theory is confining at strong coupling.) But somehow this is not emphasized all that much, possibly because it does not really fit in very well with the emphasis on algebraic methods.
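For readers who have not seen it, the lattice regularization mentioned here is usually set up with Wilson’s plaquette action (standard textbook form, added for orientation rather than taken from the thread). With link variables $U_\ell \in SU(N)$ on a hypercubic lattice of spacing $a$, and $U_P$ the ordered product of the four links around an elementary plaquette $P$:

$$
S_W[U] = \beta \sum_{P} \left( 1 - \frac{1}{N}\, \mathrm{Re}\, \mathrm{Tr}\, U_P \right), \qquad \beta = \frac{2N}{g_0^2} .
$$

Strong coupling corresponds to $\beta \to 0$, where an area law for Wilson loops (confinement) can be established with a convergent strong-coupling expansion. The continuum limit $a \to 0$ instead requires the bare coupling $g_0 \to 0$, i.e. $\beta \to \infty$, as asymptotic freedom dictates. The two regimes sit at opposite ends of the $\beta$ axis, and the hard part is controlling everything in between.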

thomas,

What you suggest is exactly what many mathematical physicists have tried to do, and I’d think it’s considered the only known path to getting the million dollars. The reason you don’t see papers about it is that no one has a good idea about how to do this. On a fixed finite lattice you have good control over the weak and strong coupling limits, but this isn’t good enough. The problem seems to be that no one understands well the physics of how the weakly coupled behavior crosses over to the strongly coupled. There are ideas about large N limits, but for fixed N you just don’t have a good idea. Lacking any real “physical” argument for what is going on, finding a rigorous mathematical argument is probably hopeless.

One example of this kind of thing is the Tomboulis paper discussed back in 2007 here

http://www.math.columbia.edu/~woit/wordpress/?p=576

and here’s a later critique of it

http://arxiv.org/abs/0901.4246

Work like the Tomboulis paper is indeed what I had in mind. I was mainly commenting on the fact that the Simons people organize a meeting on the Foundations of QFT and it appears that this sort of thing is not even discussed (they did, admittedly, have a talk by Kaplan on lattice fermions and lattice SUSY).

Peter

On following your link to CERN book reviews, I downloaded John Marburger’s Constructing Reality on the basis of the review. For the non-physicist a demanding but very engaging overview on QT and particle physics. Interestingly, in a long book he only devotes two or three paragraphs to ST, and in chapter 6 – The Machinery of Particle Discovery – he references ST explicitly, but the footnotes take the reader only to Greene’s The Elegant Universe, and to yourself and Lee Smolin who “approach string theory with much insight and considerably less enthusiasm”.

Balance at last?

harryb,

Thanks, I haven’t seen that book. On the whole discussion of string theory has been much more balanced the last few years, for whatever reason…

If philosophers can’t solve the technical problems of QFT, why not let the lawyers have a crack at it?

Don’t physicists become very nervous about the open consistency questions in quantum field theory? Friends whom I asked compared them with the similar problems in analysis, which remained unsettled for about 1.5 centuries, but the intensity and time frame would place them more properly (and more uneasily) alongside the centuries of unclear foundational issues in geometry. And until those open issues are really solved, doesn’t physics risk running into a dead end, since one needs enough theory to learn what the data tell us? When I read about waiting for accelerator or astrophysical data, I wonder if the situation could actually be worse (i.e. even if current theories fit reality, one could only find that out with a better theory, which is missing). The “practical standpoint” one reads about sounds wrong to me; in the history of science, for example in the disputes between Ptolemaic and Copernican astronomy, real insight and progress came only with the “impractical theory”.

I totally agree. If you are going to botch it (and I am not necessarily demeaning that activity), it should be with the simplest thing available, which is why I am not in favour of (e.g.) the Epstein-Glaser technique or Hopf algebras. I challenge anyone who thinks that “regularizing” pathologically divergent integrals is an acceptable activity to formulate their theory in a way that avoids mention of divergent integrals at all. Introducing extra, spurious parameters that were not present at the outset is not allowed.

@Chris – But isn’t renormalization very nicely in the process of becoming clear, in the work of Connes, Kreimer, and Marcolli?

@Peter Woit: “non-renormalizable theories like gravity” – what do you think about Kreimer’s idea that it may be renormalizable?

thomas,

I don’t know what idea of Kreimer’s about quantum gravity you’re talking about, and I don’t think recent work by Connes, Kreimer, Marcolli resolves the problems with consistency of QFT that are serious worries. They’re all studying the perturbation expansion as far as I know, and I don’t see how you can get a fully consistent theory even for QED that way.

Hi Peter,

Yes, that work on renormalization probably fits better into the process of extracting interesting “platonic ideas” from quantum field theory and finding where they arise in pure mathematics, not into those consistency questions. My impression is that such use of ideas from physics just extends what the ancient Greek geometers did in using intuitions about space, coming from our biology, to do geometry; much later, even Kant struggled with distinguishing mathematics, “platonic ideas” and physics (I now recall that this confusion extends to Bertrand Russell too: his PhD thesis was about “proving”, from first, necessary principles of the concept of space, constraints on the physical universe).

Kreimer’s remarks on quantum gravity are linked to on his website: http://www2.mathematik.hu-berlin.de/~kreimer/index.php?section=research&lang=en

And the arXiv has some articles by Jack Morava on physics; I would like to know what physicists think about them (in mathematics his genius is so highly esteemed that I can’t imagine his ideas on physics are not deep and interesting too), e.g. http://arxiv.org/abs/math/9908006 , http://arxiv.org/abs/math/0407104 , http://arxiv.org/abs/1001.0965

@Chris Oakley wrote:

“I challenge anyone who thinks that “regularizing” pathologically divergent integrals is an acceptable activity to formulate their theory in a way that avoids mention of divergent integrals at all.”

The Epstein-Glaser method precisely meets your challenge. To see that, one need only study it. Other, more conventional methods, whether or not they involve divergent integrals, also lead to exactly the same result. Though if extensive discussions on spr did not convince you of the soundness of renormalization, a few blog comments won’t either. However, the false implication made in your comment is worth correcting.

Ivor,

As I understand it, the Epstein-Glaser method involves inserting c-number functions which generalise the otherwise-badly-behaved time-ordered product expressions that appear in the perturbation expansion. A limit is taken with these functions to obtain a finite “physical” result.

The renormalization I was taught (in Cambridge, 30 years ago) on the other hand typically involves cutting off an integral, and the limit one takes later is with respect to this cutoff parameter.

Whilst I cannot argue that the Epstein-Glaser approach is more highbrow, it still amounts to the same thing: introducing extra degrees of freedom midstream, and taking some kind of limit. Although one may think one can get a unique answer, the fact is that by applying a little creativity one can get any answer one wants. This makes the exercise little more than the application of invented fitting functions. I see no axiomatic basis.

Enough about renormalization, please. At this level of arguing about whether perturbative divergences in general are

1. A serious problem indicating the theory is ill-defined

2. Things that can be eliminated with mathematical machine X

3. Not there if you use the renormalization group properly

the discussion is stuck in a 40-50 year old time warp. I don’t think that endlessly repeating geriatric arguments is fruitful.

This is probably very naive, but aren’t we blurring the boundaries here between showing the existence of a QFT and actually solving it? For QCD, isn’t existence pretty much a consequence of the property that the theory has a well-defined continuum limit (which is a consequence of asymptotic freedom)? Do we actually have to require, as you state, Peter, that someone solve for the collective degrees of freedom of the low-energy regime to know that the theory is well constructed? And if so, why draw the line at the energies at which low-energy particle experiments are done, as opposed to, say, requiring a first-principles construction of fluid mechanics, or of the folding of hemoglobin, from QCD, before we will accept it as mathematically rigorous?

Anon,

The problem is that you have to show that when you take the continuum limit you get a non-trivial theory. If you don’t know anything at all about the infrared behavior when you take the continuum limit it’s possible that in the limit you get a trivial theory in which all physical degrees of freedom have masses that go to infinity. Or all masses are zero (this is what is supposed to happen for the N=4 supersymmetric case).

The Clay problem is carefully worded, just asking for proof of a finite mass gap. This is pretty much the minimal information you would need to see that you have something non-trivial.
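For reference, the “mass gap” in the Clay problem is a condition on the spectrum of the Hamiltonian $H$ of the quantum theory (paraphrased from the usual formulation, not quoted from the thread): the vacuum is the unique state of zero energy, and every other state has energy at least some fixed $\Delta$,

$$
\operatorname{spec}(H) \subset \{0\} \cup [\Delta, \infty), \qquad \Delta > 0 .
$$

Roughly, in Euclidean language this shows up as connected correlation functions of local gauge-invariant operators falling off like $e^{-\Delta |x|}$ at large separation, which is why a proven $\Delta > 0$ rules out the trivial massless outcome and, combined with existence of the non-trivial continuum limit, gives the minimal non-trivial information described above.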