Since I don’t see why Resonaances should have all the fun, I guess I’ll post something here about the big upcoming news of the summer: is the 750 GeV diphoton bump still there in the 2016 LHC data? We’re about to hit a major fork in the road for high energy physics: if the bump is there, the field will be revolutionized and dominated by this for years; if it’s not, we’re back to the usual frustrating grind.

Last year’s tentative signal was based upon 3.2 inverse fb of 2015 data, and as of today the experiments have over 6 inverse fb of new 2016 data. One can guess that within ATLAS and CMS, plots have started to circulate of preliminary analyses of some sizable fraction of the new data, and some number of people now know which fork we’re headed down, a number that will grow to 6000 or so in coming weeks.

If you’re not one of those, you could try accessing this data indirectly, using a model of how the CERN administration works. According to this presentation at LHCP this past weekend

the next major update of physics results from the LHC is at the ICHEP 2016 Conference, August 2016, Chicago

and

CERN management is in regular contacts with the experiments’ Spokespersons. It is agreed that any significant (i.e. discovery-like) result (such as the 750 GeV bump becoming a signal or other) has first to be announced in a seminar at CERN.

No detailed schedule yet for ICHEP, but the first day of plenary talks there is scheduled for Monday August 8. My CERN-modeling suggests that the two forks in the road will correspond to the following two possibilities

- A “special seminar” in Geneva mid to late July, where a “discovery-like result” will be announced jointly by the CERN DG and the two experiments.

- A pair of seminars in Chicago on August 8 or shortly thereafter showing off bump-less plots. Much of the drama will be gone by then, since not all 6000 physicists will have kept quiet, and everyone will realize that if “discovery-like” was going to happen, it would have happened earlier.

It may become clear which fork we’re taking relatively soon, since the “special seminar” route takes some planning and will get announced in advance. If there’s no such news by mid-July I think it will be clear we’re headed down the boring fork in the road.

Unfortunately, life being what it is, that’s the most likely one anyway. To supplement this CERN-administration-modeling, you can watch the comment section at Resonaances, where rumors of no bump have already started to appear…

Update: Now, it’s an official rumor (since it’s on Twitter). I can add to this that, of the rumors I have heard, there have been no rumors that this official rumor is not an accurate rumor.

Yeah, I was going to say the rumour is that the bump has gone.

Jester has hinted in the comments on his blog that the bump is indeed going away:

“Many papers, including some written by the winners, made interesting contributions that will live longer than this particular bump. So this post is self-irony through the tears, rather than slut shaming as interpreted by some.”

On the bright side, the LHC is coasting along magnificently, churning out 2/fb/week despite the dump leak in the SPS. ICHEP could be presenting 15/fb of data in total, 30/fb in total by the end of 2016 assuming no more curious fouines.

Peter,

I am interested in how your “CERN-modeling” works. Would you be willing to share some details, or are your algorithms proprietary?

The model is quite simple: it just says that if there’s a “discovery”, they will reveal this at a special seminar at CERN, announced a week or so in advance. If there’s a let-down non-discovery, they will announce at a previously scheduled conference outside CERN. This modeling is based on past observations and basic understanding of how a good PR operation works. I do think it’s a pretty powerful model: once you know the announcement plan, you know the result.

Of course my confidence level in the model may depend on proprietary algorithms, although these are more relevant to evaluating rumors…

“One can guess that within ATLAS and CMS, plots have started to circulate of preliminary analyses” – do we even know reliably whether the data has been unblinded?

Dismaying for BSM hopes, but still just a rumor. Perhaps disseminated to put the bloodhounds off the scent trail?

anon, ATLAS had a meeting on June 16 at 13:00 and CMS on June 20 at 17:00: it’s impossible to keep information private among 3000+3000 persons, especially when not everybody agrees on a selective policy for announcing data. They risk that somebody could go beyond a tweet and post the digamma spectra on a blog. Anyhow, another rumor is that a few years ago, in one analysis, Higgs to gamma gamma disappeared for a while, until it reappeared with more data. Diphoton data are growing fast; they already have twice the data analysed so far.

@M, should I take that as confirmation that the data have been unblinded from someone inside an experimental collaboration?

In regards to M’s comment above, I, too, seem to recall that at one point, the Higgs digamma signal in CMS fluctuated downward quite a bit in one batch of data. I can’t remember if this was before or after the 2012 discovery announcement.

My little bird in CERN told me the bump has gone in 2016 data

I am wondering if it is commonly understood amongst particle physicists that this is the expected behavior of p-values. If there is no signal, the p-value should not be expected to converge onto any specific value as more data is collected. It will random walk between 0 and 1; i.e., if the null hypothesis is true, the p-value is a sample from a uniform distribution. See for example the simple simulation output in figure 2 here (you can easily run your own simulations as well):

http://pss.sagepub.com/content/22/11/1359.full
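For readers who want to try this themselves, here is a minimal sketch of such a simulation. It is not the code from the linked paper: the one-sided z-test on Gaussian toy data, the sample sizes, and the function names are all assumptions chosen for illustration. It repeats many independent null experiments and checks that the resulting p-values are spread evenly over the interval from 0 to 1.

```python
import random
from math import erfc, sqrt


def null_p_value(n_events, rng):
    """One-sided p-value of a z-test on n_events draws from N(0, 1).

    ASSUMPTION: toy Gaussian data with no signal, so the null hypothesis is true.
    """
    mean = sum(rng.gauss(0.0, 1.0) for _ in range(n_events)) / n_events
    z = mean * sqrt(n_events)         # standardised statistic, N(0, 1) under the null
    return 0.5 * erfc(z / sqrt(2.0))  # P(Z >= z) for a standard normal Z


def simulate(n_experiments=5000, n_events=20, seed=1):
    """Run many independent null experiments and collect their p-values."""
    rng = random.Random(seed)
    return [null_p_value(n_events, rng) for _ in range(n_experiments)]


def fraction_below(p_values, threshold):
    """Fraction of p-values smaller than threshold."""
    return sum(p < threshold for p in p_values) / len(p_values)


if __name__ == "__main__":
    ps = simulate()
    # For a uniform distribution, the fraction below t should be close to t.
    for t in (0.05, 0.25, 0.5, 0.95):
        print(f"fraction of p-values below {t}: {fraction_below(ps, t):.3f}")
```

Under these assumptions the fraction of p-values below any threshold should come out close to the threshold itself, which is what "uniform under the null" means. Note that this shows the spread across independent experiments, not the path of a single p-value as data accumulate.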

Confused,

Particle physicists do have a quite sophisticated understanding of these issues. This is a central part of what they do, and you’re mistaken if you think they’re not quite aware of this. The problem is that they do this a lot: a huge number of very different analyses get performed, so it is tricky to characterize probabilities of seeing a certain size fluctuation at a certain location. Then there are two independent experiments: if they see something at roughly the same location, how do you take into account the significance of that?

The bottom line here is that, however you quantified it, last year’s data contained a potentially significant signal, although one not large enough to be convincing. With this year’s data, the situation is just about binary: with pretty high probability, if there’s something there, the signal should get larger and become convincing; if not, its significance should recede. The rumor is that the latter has happened, with details still to come.

I’d like to have seen a Bayesian analysis of the combined data. My feeling is that the complicated statistical analyses (local vs global and LEE, and the lack of a reliable combination of ATLAS and CMS data) contributed to the hype and confusion about a new particle. I think this mess would have been partially avoided with e.g. Bayes-factors for a diphoton toy model vs the SM.

Bayes_or_bust,

I don’t think a Bayesian or other analysis would have changed anything, other than giving people yet more different inconclusive ways of characterizing the significance of the bump.

What has surprised me about the LHC results has been how rare things like this are. If you look back at the history of HEP experiments you can find a long list of interesting looking, but not quite convincing effects that have appeared and then disappeared. This is just part of how this kind of science works. Given the huge number of different analyses going on at the LHC, I’d have expected more such stories. The experiments have done an unusually excellent job of trying to be rigorous about their statistical methods.

The reaction of the theory community is a different story, see the discussion at Resonaances. There the way the field is intensely starved for anything new led to this getting an unusual (and, it seems, unjustified) amount of attention.

@Peter Woit

Of course the real question is whether or not you would be prepared to kill someone’s dog if your CERN PR model was falsified. 🙂

Bayes_or_bust,

Before calculating the Bayes-factor for a diphoton toy model, you have to decide which toy model(s) to consider. How would you do this?

I would think that you would want to use one that existed and was deemed “reasonable” prior to observation of the diphoton excess. That would be to avoid models that have since been tuned or selected to fit the observation. However, I’m not sure any such models existed.

The statistical methods we’ve developed are robust to all of this… that oft-decried 5-sigma standard of evidence is a pragmatic way to get around the trickiness of the 3-sigma jumps and bumps that should happen occasionally when performing hundreds and thousands of analyses. We just need to stick to the decision! Instead, we collectively lost our minds over a tantalizing hint, and will subsequently pay the price if it is falsified.
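The arithmetic behind this point can be sketched in a few lines. This is only an illustration under a strong simplifying assumption: the searches are treated as statistically independent, each with a Gaussian one-sided tail probability, which real overlapping analyses are not. The function names are made up for this example.

```python
from math import erfc, sqrt


def one_sided_p(z_sigma):
    """Probability that a standard normal variable exceeds z_sigma."""
    return 0.5 * erfc(z_sigma / sqrt(2.0))


def prob_at_least_one(z_sigma, n_searches):
    """Chance that at least one of n_searches null searches exceeds z_sigma.

    ASSUMPTION: the searches are independent, which is an idealisation.
    """
    return 1.0 - (1.0 - one_sided_p(z_sigma)) ** n_searches


if __name__ == "__main__":
    for n in (1, 100, 1000):
        p3 = prob_at_least_one(3.0, n)
        p5 = prob_at_least_one(5.0, n)
        print(f"{n:5d} searches: P(>=3 sigma somewhere) = {p3:.3f}, "
              f"P(>=5 sigma somewhere) = {p5:.2e}")
```

With a thousand independent looks, a 3-sigma fluctuation somewhere is more likely than not, while a 5-sigma one stays well below the percent level. That gap is the pragmatic case for the higher threshold.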

It’s like playing Texas Hold’Em. You’ve got a pocket pair of aces. Yes, it’s a good hand pre-flop. But, it’s not enough to bet your house on, there are 5 cards to play. At least wait until the turn! In science, we should really wait all the way until the river in order to call the game ;).

Actually, Sal, you kind of want to push people off those pocket twos before the flop, just in case, which does justify a pretty aggressive bet pre-flop.

Maybe this explains string theory PR?

@GS I think a toy model could have been made. @Peter it’s not a lack of rigour that I dislike. It’s the complicated frequentist techniques and lack of an official combination of ATLAS and CMS data.

Even now @jester’s blog you can see confusion about this. No one knows the combined global significance for that search. And they worry they’ve been fooled by the sheer number of analyses.

Suppose we knew the Bayes-factor and it said: diphoton toy model 5 times more plausible after 2015 data. Don’t you think that would alleviate confusion? No global/local/LEE to contend with.

That said, the decisions people make to, e.g., write a paper or get excited are based on many factors beyond the plausibility of the new physics model.

Bayes_or_bust,

No, “diphoton toy model 5 times more plausible after 2015 data” would add to, not reduce confusion, by introducing a host of new complications (which toy model? how do you get the “5 times”?).

The experiments I think do a good job of quantifying what can be quantified. Beyond that, it’s a matter of judgment and experience, the importance of which can’t be eliminated by more sophisticated statistical analysis. My impression is that a typical expert reaction to the diphoton plots and to the kind of numbers quoted for statistical significance is something like “yes, looks like there could very well be something there, but I can think of several cases in the past when bumps like that went away with more data”. You can assign all sorts of numbers to this situation, but the bottom line is that the 2015 data was not convincing evidence, especially not convincing evidence for an extraordinary claim (completely new, never before seen physics not part of the standard model). Extraordinary claims require extraordinary evidence, and that wasn’t there.

What’s interesting about this situation is that the new data should with high probability resolve the issue. If there really is a state with that kind of cross-section, we should see convincing evidence soon. If there isn’t, the new data should sizably reduce the significance of the bump. Landing in the middle zone isn’t especially likely.
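A rough way to see why the middle zone is unlikely is the usual square-root scaling of significance with integrated luminosity. The sketch below assumes a simple counting experiment with fixed signal-to-background ratio, and it uses an illustrative 3-sigma starting value that is a placeholder, not a number taken from either experiment. The luminosities are the 3.2 and roughly 6 inverse fb quoted in the post.

```python
from math import sqrt


def grown_significance(z_ref, lumi_ref, lumi_total):
    """Expected significance if a real signal accumulates like the background.

    With S and B both proportional to luminosity, Z ~ S / sqrt(B) grows
    as sqrt(lumi_total / lumi_ref).
    """
    return z_ref * sqrt(lumi_total / lumi_ref)


def diluted_significance(z_ref, lumi_ref, lumi_total):
    """Significance of the old excess if the new data add background only.

    The excess count stays fixed while B grows, so Z shrinks
    as sqrt(lumi_ref / lumi_total).
    """
    return z_ref * sqrt(lumi_ref / lumi_total)


if __name__ == "__main__":
    z_2015 = 3.0     # ASSUMPTION: illustrative local significance, not a measured value
    lumi_2015 = 3.2  # inverse fb of 2015 data, as quoted in the post
    lumi_2016 = 6.0  # inverse fb of 2016 data, "over 6" in the post
    total = lumi_2015 + lumi_2016
    print(f"real signal:      {grown_significance(z_2015, lumi_2015, total):.2f} sigma")
    print(f"fluctuation only: {diluted_significance(z_2015, lumi_2015, total):.2f} sigma")
```

The two outcomes land far apart, which is the sense in which the new data should be close to decisive. The sketch ignores the possibility that the 2015 excess was a real signal that had also fluctuated upward, which would soften the first number.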

The Bayes-factor (I said 5 times for an example) would be calculated in the usual way. I agree there would be questions about which toy-model was tested against the SM, but even in the frequentist log-likelihood ratio methods currently used for the diphoton analyses, one has to make an alternative model to test. So I don’t see it as problematic. But we’ll have to agree to disagree, I think.
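To make "the usual way" concrete, here is a toy Bayes-factor calculation for a single counting window. Everything in it is an assumption for illustration: one bin, a known background expectation, a flat prior on the signal yield up to a chosen maximum, and invented event counts. It is not the experiments' likelihood and says nothing about the actual diphoton data.

```python
from math import exp, lgamma, log


def poisson_pmf(n, mu):
    """Poisson probability of observing n counts given mean mu > 0."""
    return exp(n * log(mu) - mu - lgamma(n + 1))


def bayes_factor(n_obs, background, s_max, steps=2000):
    """Bayes factor of 'background + signal' against 'background only'.

    ASSUMPTION: flat prior on the signal yield s over [0, s_max]; the
    marginal likelihood is computed with the trapezoidal rule.
    """
    h = s_max / steps
    total = 0.0
    for i in range(steps + 1):
        weight = 0.5 if i in (0, steps) else 1.0
        total += weight * poisson_pmf(n_obs, background + i * h)
    marginal_signal = total * h / s_max  # the prior density is 1 / s_max
    return marginal_signal / poisson_pmf(n_obs, background)


if __name__ == "__main__":
    # Hypothetical numbers: 10 expected background events in the window.
    for n_obs in (10, 15, 20, 25):
        bf = bayes_factor(n_obs, background=10.0, s_max=30.0)
        print(f"observed {n_obs:2d} events: Bayes factor = {bf:.3g}")
```

The result depends on the prior range `s_max`: widening it penalises the signal model, which is one form of the "which toy model?" objection raised above.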

Peter and others,

Is there any known plausible theoretical reason for the existence of a heavy resonant state at 750 GeV ? That would certainly make it a-priori more likely to exist.

After all, the Higgs discovery was extremely well-motivated theoretically around *half a century* before it was discovered.

Dear anonymous, the Theory of Anything univocally predicts 10^500 different vacua. About 10^350 of them are indistinguishable from the Standard Model, and about 10^345 of them have a diphoton resonance at 750 GeV.

One thing that bugs me a bit is why all the particle physicists got so thrilled by the news of the bump. How can it be good to discover something we don’t understand? Effectively, that increases our ignorance about the world. Surely the aim is to decrease our ignorance, by making sense of the data we already know. A new particle just pushes us further from that goal. I appreciate a new particle would have given them something to work on, but that’s not the point – there’s already plenty of stuff to work on: go solve the hierarchy problem, or dark matter if you’re short of something to do.

Another Anon

Unexpected findings do not increase our ignorance; they reveal our ignorance, which is the first step in reducing it.

Good point. But I don’t think I’d be so happy to discover I was ignorant.

@ Another Anon: Then you apparently do not have the soul of a scientist.

PS Thinking about new unexpected stuff may help you to find clues to other difficult phenomena.

At one time, wouldn’t something like 2/3 of the particles we already observe have been completely unexpected? Of course, I suppose today we have much better constraints on where something unforeseen might be lurking. Still, I’m surprised and chagrined at how risible some in the blogosphere seem to think the activity and excitement around the 750 GeV bump has been. It’s not like HEP theorists have had much else that was obviously better to do lately.

LMMI,

The main blog making fun of this has been Resonaances, whose author himself has papers on two different models explaining the bump. There’s a reason he calls himself “Jester”, and I think a sense of humor about this whole situation is not out of place…

I do get the humor, sort of. Given the abject starvation for new data theorists are experiencing, it’s certainly better for one’s sanity to laugh than to cry. But there’s also some accusatory chatter about schlock being dumped into the arXiv to drive up citation counts, people ruining their own Christmas, etc., and all that seems kind of cruel to me. I mean, HEP theorists may be treading a path into the driest desert imaginable. The way things are going, there may literally be nothing for the next Feynman or Gell-Mann or Weinberg or Wilczek to sink his or her teeth into…EVER. So, for pity’s sake, why look askance at people burning a few hours and electrons on a potentially vanishing dream. At least they’re theorizing about an actual result. Spurious result or not, it’s no anthropic landscape.

“I do think it’s a pretty powerful model: once you know the announcement plan, you know the result.”

So you are saying that you can make predictions based on observation, and that the results are falsifiable.

This aligns nicely with the rumors I have been hearing.

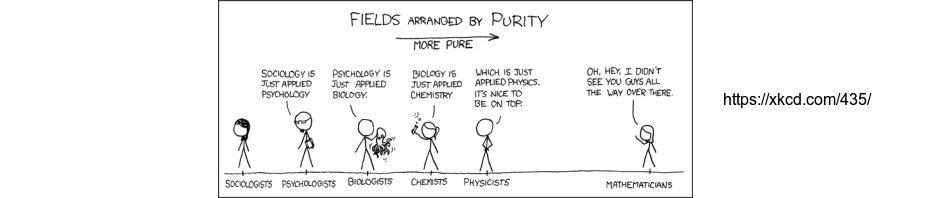

I think this can all be explained with sociology/game theory.

If I remember properly, before the LHC came on, physics departments increased the number of phenomenologists they were hiring, because they thought there was a substantial probability that with the LHC, this field would become more important.

So now we have all these untenured phenomenologists, all of whom are desperate to write a significant paper in the next few years. It would be foolish of them not to publish explanations for any possibly significant bump discovered at the LHC, on the off-chance that the bump is real and their paper is correct, winning them the tenure lottery.

>> all these untenured phenomenologists

It seems that the ‘worst case scenario’ is becoming reality: The LHC saw the Higgs but nothing else.

So what now?

Another Anon wrote:

> But I don’t think I’d be so happy to discover I was ignorant.

Opinions may vary, but I already feel extremely ignorant about particle physics. There are lots of mysteries in this subject, from why such a complicated theory as the Standard Model seems to work so well, to what’s the explanation of dark matter (a new particle not in the Standard Model?), inflation (another new particle?), dark energy, etc. etc. Since I’m completely stuck on trying to figure these things out, any further clues would be great.

When will they announce the spin of the Higgs-like particle that got announced in this way in 2012?

I was hoping the bump wouldn’t go away, but would grow into a signal.
