Since I had a little free time today, I thought I’d write something motivated by two things I came across: Sabine Hossenfelder’s What’s Going Wrong in Particle Physics, and this summer’s upcoming SUSY 2023 conference and pre-SUSY 2023 school. While there are a lot of ways in which I disagree with Hossenfelder’s critique, there are some ways in which it is perfectly accurate. For what possible reason is part of the physics community organizing summer schools to train students in topics like “Supersymmetry Phenomenology” or “Supersymmetry and Higgs Physics”? “Machine Learning for SUSY Model Building” nicely encapsulates what’s going wrong in one part of theoretical particle physics.

To begin my anti-SUSY rant, I looked back at the many pages I wrote 20 years ago about what was wrong with SUSY extensions of the SM in chapter 12 of Not Even Wrong. There I started out by noting that there were 37,000 or so SUSY papers at the time (SPIRES database). Wondering what the current number is, I did the same search on the current INSPIRE database, which showed 68,469 results. The necessary rant was clear: things have not gotten better, the zombie subject lives on, fed by summer schools like pre-SUSY 2023, and we’re all doomed.

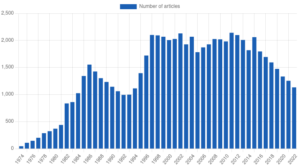

But then I decided to do one last search, to check the number of articles by year (e.g. search “supersymmetry and de=2020”). The results were surprising, and I spent some time compiling numbers for the following table:

These numbers show a distinct and continuing fall-off starting after 2015, the reason for which is clear. The LHC results were in, and a bad idea (SUSY extensions of the SM) had been torpedoed by experiment, taking on water and sinking after 20 years of dominance of the subject. To get an idea of the effect of the LHC results, you can read this 2014 piece by Joe Lykken and Maria Spiropulu (discussed here), by authors always ahead of the curve. No number of summer schools on SUSY phenomenology is going to bring this field back to health.

Going back to INSPIRE, I realized that I hadn’t needed to do the work of creating the above bar graph, the system does it for you in the upper left corner. I then started trying out other search terms. “string theory” shows no signs of ill-health, and “quantum gravity”, “holography”, “wormhole”, etc. are picking up steam, drawing in those fleeing the sinking SUSY ship. With no experimentalists to help us by killing off bad ideas in these areas, some zombies are going to be with us for a very long time…

Update: There are a couple of things from Michael Peskin relevant to this that might be of interest: an extensive 2021 interview, and a very recent “vision statement” about particle physics. Peskin’s point of view is, I think, exactly what Hossenfelder is arguing against. He continues to push strongly for a very expensive near-term collider project, and doesn’t seem to have learned much of anything from a long career of working on failed ideas. I remember attending a 2007 colloquium talk here at Columbia where he put up a slide showing all the SUSY particles with masses of a few hundred GeV that the LHC was about to find, and assured us that over the next 5-10 years we’d be seeing evidence of a WIMP from several different sources. According to the interview, in 2021 he was working on Randall-Sundrum models (see here) and wondering about the “little hierarchy problem” of why all the new physics was just above the LHC-accessible scale rather than at the electroweak breaking scale. I very much agree with some of his vision statement (the Higgs sector is the part of the SM we don’t fully understand, and the argument for colliders is that they are the only way to study this), but his devotion to failed ideas (not just Randall-Sundrum, but string theory also) as the way forward is discouraging. In the interview he admits that post-LHC abandonment of particle physics is looking like the most likely future:

I think if you were a betting man, you would bet that LHC will be the last great particle accelerator, and the whole field will dissipate after the LHC is over.

Most of his prominent colleagues have the same attitude that the subject is over, and have already moved on, unfortunately to things like creating wormholes in the lab.

It looks to me like your graph clearly shows that the fall started in 2012, a huge year for the LHC, with a hopeful blip in 2016, one year after the LHC restarted in 2015.

Perhaps the experimental result of thousands of theorists coming to the ends of their career and realizing they have nothing to show for it will eventually start to tell….

Hi Peter,

Thanks for digging up these numbers, which are very interesting. A lot of people in hep-ph have been shifting weight to axion-like particles and general hidden sector things, branching out into astroparticle physics and sprinkling machine learning over it for good measure.

Unfortunately, that doesn’t solve the problem in the community, it just moves it elsewhere. Since they never acknowledged that their LHC predictions went badly wrong, they’re doomed to repeat their mistakes.

The situation in particle physics is very comparable to the reproducibility crisis which they had in psychology. Both were driven by bad scientific methodology that had become normalized. Psychologists managed to mobilize the community and introduce better methodology. And, amazingly enough, this was a bottom-up effort, purely community driven.

I sincerely hope that particle physicists can get their act together and do the same thing. A lot could be gained in this community if everyone had a basic education in the philosophy and sociology of science. And yeah, it would hurt for a few years, but I believe that particle physics stands to gain much from it.

Whatever mistake or bad strategy particle physicists (especially theorists) might have committed or followed, particle physics will naturally correct itself as physics has the most powerful feedback control mechanism in science: experiments.

Nature as expressed through experiments will tell us whether it has implemented SUSY or not!

In this respect, physics is fundamentally different from psychology, and I disagree with Sabine. Psychology experiments are very different from physics experiments, because in psychology you have to control for the informational content of people’s brains. Every electron and quark is identical to any other electron and quark, whereas every human being in an experiment is different in so many ways, especially in the informational content of their brain, which shapes behaviour. Moreover, the crisis in psychology was not a crisis in theory building but in using sloppy statistics (p-values) to overinterpret experimental results, whereas particle physicists are extremely careful and accurate in their statistical interpretation of experimental data.

The wild model building bonanza is only due to a lack of experimental constraints.

“So what will happen to particle physicists? Well, if you extrapolate from their past behavior to the future, then the best prediction for what will happen is:

Nothing. They will continue doing the same thing they’ve been doing for the past 50 years. It will continue to not work. Governments will realize that particle physics is eating up a lot of money for nothing in return, funding will collapse, people will leave, the end.”

Sabine, I think your extrapolation is wrong.

I suspect particle physicists have changed their minds without conceding it, as Peter’s numbers show: “These numbers show a distinct and continuing fall-off starting after 2015, the reason for which is clear …” Isn’t the claim that every spin-off idea is equally unpromising (that “they’re doomed to repeat their mistakes”) still an argument that has to be made? I wouldn’t be surprised if this were indeed the case (at least for string theory), but I think data helps keep us from making blanket statements.

More Anonymous

@Tom Weidig: to be fair to Sabine, I think she may have meant questionable statistical practices like p-hacking, mis-interpretation of p-values etc when she said “bad scientific methodology that had become normalized” (perhaps Sabine would like to comment?).

She’s right that psychologists “managed to mobilize the community and introduce better methodology”. These include the emergence of Registered Reports (RRs), which guarantee publication of research on the basis that the methodology is refereed and approved _before_ the study takes place, rather than afterwards.

By decoupling publication from the level of p-value achieved, RRs to date suggest that research in psychology _does_ have a problem with theory building, in the sense of failing to propose hypotheses leading to effect sizes convincingly above noise level. So they’re not so far from HEP theorists really.

As someone once said, science is _hard_, but that’s no excuse for persisting with stuff that’s long since keeled over and died.

I agree that the current experimental results are not encouraging. However, I was always impressed when I went to SUSY talks by the graph showing the three standard model coupling constants varying with energy and almost meeting at around 10^15 GeV. When SUSY was included they all meet at exactly the same place. Do you feel that this was never a convincing argument?

All,

Please stick to the problems of particle physics, which are a big and complicated issue. A sensible discussion of the larger problems of other sciences and social science would be something way beyond my ability to moderate.

Laurence Lurio,

I wrote extensively about this in my book and on the blog, agreeing that it’s probably the best argument for SUSY extensions of the SM, but is still extremely weak and unconvincing. It’s an argument that is often made in a misleading way.

Technically, one problem is that the graph often presented is a one-loop calculation, ignoring the fact that two-loop calculations make the “three lines join at a point” significantly less true. To really do this calculation, you need to use updated LHC limits (the SUSY change in evolution can’t start until the scale at which you see SUSY), you need to include higher-loop contributions, and you need to discuss “threshold effects” of the unknown physics of new Higgs bosons you have to introduce at the GUT scale. With all this taken into account, I don’t think there ever was a very impressive “three lines join at a point”, just “they join better than with no SUSY”, which is something different. I haven’t looked into this recently, and I’m curious whether someone knows of an up-to-date accurate calculation.
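To make the one-loop version of this argument concrete, here is a toy Python sketch (not a calculation from the post). It uses the textbook one-loop running, in which each inverse coupling changes linearly in ln(mu): d(alpha_i^-1)/d ln(mu) = -b_i/(2*pi), with b = (41/10, -19/6, -7) in the SM and (33/5, 1, -3) in the MSSM, hypercharge in GUT normalization. The starting values at M_Z are rounded approximations, and the sharp switch from SM to MSSM running at a chosen `m_susy` is an illustrative assumption. Two-loop terms and threshold effects, which the paragraph above says matter, are deliberately left out.

```python
import math

# Rounded, approximate inverse couplings at the Z mass (U(1) in GUT normalization).
M_Z = 91.19  # GeV
ALPHA_INV_MZ = (59.0, 29.6, 8.5)

# One-loop coefficients b_i in d(alpha_i^-1)/d ln(mu) = -b_i / (2*pi).
B_SM = (41 / 10, -19 / 6, -7)
B_MSSM = (33 / 5, 1, -3)


def run(a0, b, t):
    """Run inverse couplings a0 at one loop over t = ln(mu / mu0)."""
    return tuple(a - bi * t / (2 * math.pi) for a, bi in zip(a0, b))


def alpha_inv(mu, m_susy=None):
    """Inverse couplings at scale mu (GeV).

    m_susy=None means SM running all the way up. Otherwise run with SM
    coefficients up to m_susy and MSSM coefficients above it (an assumed,
    sharp threshold with no threshold corrections).
    """
    if m_susy is None or mu <= m_susy:
        return run(ALPHA_INV_MZ, B_SM, math.log(mu / M_Z))
    at_threshold = run(ALPHA_INV_MZ, B_SM, math.log(m_susy / M_Z))
    return run(at_threshold, B_MSSM, math.log(mu / m_susy))


def min_spread(m_susy=None, lo=1e3, hi=1e19, steps=2000):
    """Scan a logarithmic grid of scales and return (mu, spread) where the
    gap between the largest and smallest inverse coupling is smallest."""
    best = None
    for k in range(steps + 1):
        mu = lo * (hi / lo) ** (k / steps)
        a = alpha_inv(mu, m_susy)
        spread = max(a) - min(a)
        if best is None or spread < best[1]:
            best = (mu, spread)
    return best


if __name__ == "__main__":
    cases = [("SM only", None), ("MSSM from M_Z", M_Z), ("MSSM from 1 TeV", 1e3)]
    for label, m_susy in cases:
        mu, spread = min_spread(m_susy)
        print(f"{label:16s} closest approach {spread:.2f} at mu ~ {mu:.1e} GeV")
```

With these rough inputs the SM-only lines never get closer than roughly 3.7 units of alpha^-1 (near 2×10^14 GeV), while MSSM running starting at M_Z brings all three within about 0.1 near 2×10^16 GeV, and moving the threshold up to 1 TeV loosens this to a few tenths. That is the “they join better than with no SUSY” statement at one loop; it says nothing about the higher-loop and threshold corrections discussed above.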

The big problem with any such calculation, though, is that its assumptions are implausible and discredited by experiment. As for implausible, it assumes the “desert hypothesis”: no new physics between the EW breaking scale and the GUT scale. As for discredited, GUTs (SUSY or otherwise) generically predict proton decay rates in conflict with observation.

In addition, beyond being implausible and in conflict with experiment, GUTs have fundamental generic problems of just adding a lot of complex structure to the SM, while not explaining anything. What you are doing is postulating a larger symmetry, but then not getting anything out of it since you have to add a complicated symmetry breaking structure to explain why we don’t see evidence of this new symmetry. SUSY by itself has the same generic problem in spades (you postulate a new symmetry making your theory more predictive, the predictions are wrong, so you have to add a complicated mess purely to get rid of the new predictions of the symmetry). These problems have been around since long before the LHC put the final nails in the coffin of this subject.

I think the downward trend in Supersymmetry papers would be more meaningful if compared to a baseline (e.g., compared to number of papers on Higgs, QCD, neutrinos, dark matter, or some such). I don’t know what the appropriate comparison would be – perhaps QCD? – or else I would try myself.

What happened c. 1985? There’s a similar downward trend that only reverses around 1994, perhaps sparked by developments around the Second Superstring Revolution. Then again, the earlier downswing would seem to coincide with Green-Schwarz and the First Superstring Revolution, so maybe Strings can’t explain these trends in particle physics so neatly.

To pick a nit, the graph looks to me as if 2015 was a singular outlier in a trend that actually started in 2011. But this is still compatible, in fact possibly even more compatible, with the explanation that the LHC permanently altered the trajectory of the field.

(In fact 2011 is either the highest point, or tied for highest with 2002. One might not unreasonably read this graph as a steady underlying increase leading up to 2011, with two superimposed waves of excess enthusiasm in the run-up to 1986 and 1997 – sound familiar? –, and then a steady underlying decrease since.)

Thank you for noticing this and pointing it out. It was a rare piece of genuinely heartening news, even if it bodes ill for dispatching the less experimentally tractable zombies.

Anonyrat,

I think taking a ratio with some other keyword with its own history is just going to confound different phenomena.

What’s remarkable to me about the SUSY numbers is how little they changed from 1997 to 2015, which indicates a very large and stable industry of people consistently pumping out “SUSY” papers. Something happened to wreck the health and stability of this industry and it’s pretty clear what it was.

LMMI,

The first peak is definitely the “First Superstring Revolution”, when for several years everyone was writing papers about how SUSY models derived from the superstring were going to unify physics. I’m not sure what dynamics drove the numbers up during the mid-90s to the stable level at which the subject then remained for a very long time.

A more accurate plot done with bibliometric codes takes into account these factors:

– The overall number of papers/year increased significantly.

– Papers on “MSSM” or “gluino” or “sugra” are on supersymmetry even if it’s not mentioned, etc.

This shows a decline of “SUSY&co” and of “string&co”. “Higgs” peaked and it’s now declining. “DarkMatter&co” grows.
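The two corrections listed above (normalizing by the total output per year, and counting papers that use related terms even when “supersymmetry” itself is absent) can be sketched in a few lines of Python. This is a minimal illustration, not the commenter’s bibliometric code: it assumes hypothetical records of the form (year, title or abstract text), and the keyword group and simple substring matching are illustrative assumptions.

```python
from collections import Counter

# Hypothetical keyword group standing in for "SUSY&co"; the actual codes are not given.
SUSY_AND_CO = ("supersymmetry", "mssm", "gluino", "sugra")


def share_by_year(papers, keywords):
    """papers: iterable of (year, text) pairs.

    Returns {year: fraction of that year's papers whose text mentions any
    keyword}, so a growing total output per year does not masquerade as
    growing interest in the topic.
    """
    totals = Counter()
    hits = Counter()
    for year, text in papers:
        totals[year] += 1
        lowered = text.lower()
        if any(k in lowered for k in keywords):
            hits[year] += 1
    return {year: hits[year] / totals[year] for year in sorted(totals)}
```

Substring matching is crude (it would need care with short keywords that appear inside unrelated words), but it is enough to show why raw counts and normalized shares can tell different stories.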

I wish people would stop saying “particle physicists”. This discussion is more about particle theorists. Experimentalists might not get their backs up so much over some of the comments in popular media if this distinction were made…

On SUSY the LHC was very successful – we were told we would produce an abundance of gluinos and squarks when we turned it on. We made some rather difficult measurements that showed almost straight away that prediction was wrong.

Hello Peter,

If I had to guess, I would say that Witten’s 1995 announcement of the equivalence of the various string theories through S and T dualities and M-Theory is the reason for the late 90s spike. Also you had Maldacena’s AdS/CFT paper in 1997/98. These two milestones alone probably contributed significantly to the number of papers mentioning “supersymmetry”.

Regards,

A Humble Grad Student.

Comment on traversable wormholes

https://arxiv.org/abs/2302.07897

@Robert Matthews, I don’t believe experimental particle physics has a statistical reproducibility problem per se. We publish null results, and have for decades–null results are informative, so publication hasn’t been tied to p-values. More recently, most experiments have adopted strong measures against p-hacking–analyses have to be fully defined using simulated data, and results (especially major results) are routinely blinded.

What Sabine generally complains about is mostly the generation of theoretical models, and experimentalists using those theories to justify new and bigger machines. I’m unconvinced that the problem there is substantively analogous to the reproducibility crisis in psychology, nor as easily solved as pointing out bad statistical practices.