
Michael Thaddeus

Professor of Mathematics

mt324@columbia.edu

February 2022

Rankings create powerful incentives to manipulate data and distort institutional behavior for the sole or primary purpose of inflating one’s score. Because the rankings depend heavily on unaudited, self-reported data, there is no way to ensure either the accuracy of the information or the reliability of the resulting rankings. — Colin Diver

§1: A DIZZYING ASCENT

Nearly forty years after their inception, the U.S. News rankings of colleges and universities continue to fascinate students, parents, and alumni. They receive millions of views annually, have spawned numerous imitators, spark ardent discussions on web forums like Quora, and have even outlived their namesake magazine, U.S. News & World Report, which last appeared in print in 2010.1

A selling point of the U.S. News rankings is that they claim to be based largely on uniform, objective figures like graduation rates and test scores. Twenty percent of an institution’s ranking is based on a “peer assessment survey” in which college presidents, provosts, and admissions deans are asked to rate other institutions, but the remaining 80% is based entirely on numerical data collected by the institution itself. Some of this is reported by colleges and universities to the government under Federal law, some of it is voluntarily released by these institutions to the public, and some of it is provided directly to U.S. News.

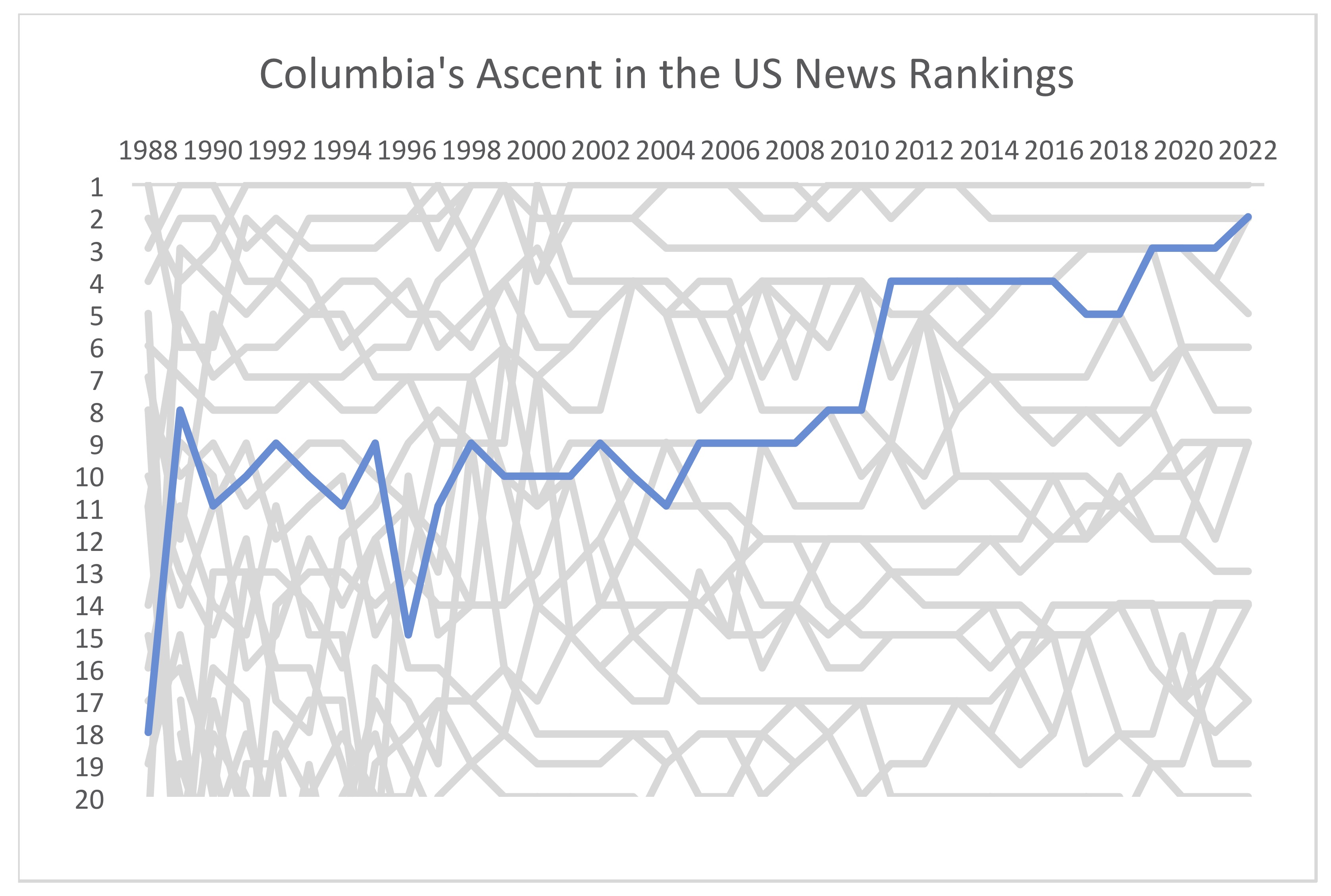

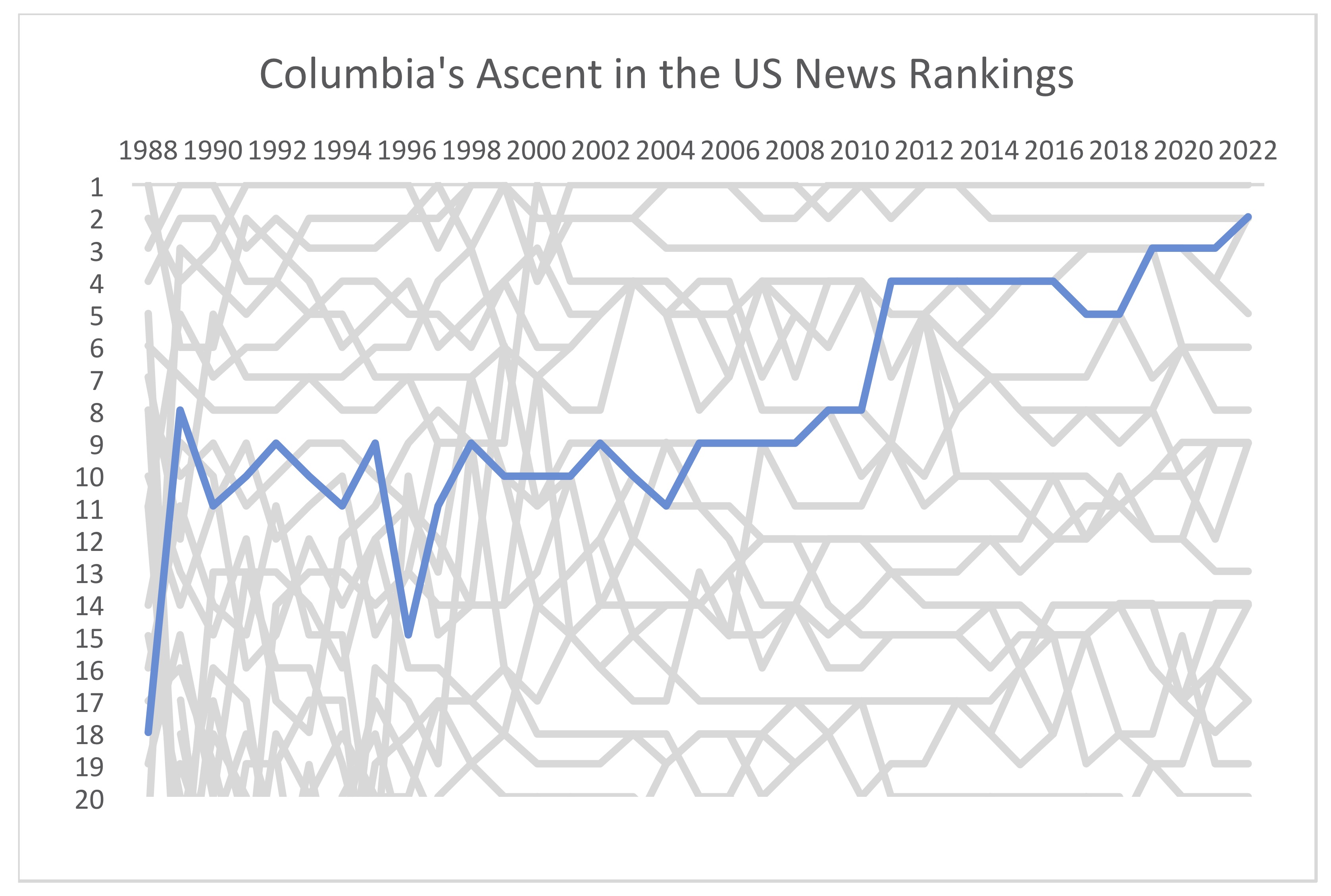

Like other faculty members at Columbia University, I have followed Columbia’s position in the U.S. News ranking of National Universities with considerable interest. It has been gratifying to witness Columbia’s steady rise from 18th place, on its debut in 1988, to the lofty position of 2nd place which it attained this year, surpassed only by Princeton and tied with Harvard and MIT.

Columbia’s ascent to this pinnacle was met on campus with guarded elation. Executive Vice President for Arts & Sciences Amy Hungerford heralded the milestone in a Zoom call with faculty. The dean of undergraduate admissions and financial aid, Jessica Marinaccio, said in a statement posted on the Columbia College website, “Columbia is proud of all the factors that led U.S. News & World Report to see us as one of the best universities in the world. We have been working on every level to support our students, and are proud to be recognized for this.”

A few other top-tier universities have also improved their standings, but none has matched Columbia’s extraordinary rise. It is natural to wonder what the reason might be. Why have Columbia’s fortunes improved so dramatically? One possibility that springs to mind is the general improvement in the quality of life in New York City, and specifically the decline in crime; but this can have at best an indirect effect, since the U.S. News formula uses only figures directly related to academic merit, not quality-of-life indicators or crime rates. To see what is really happening, we need to delve into these figures in more detail.

When we do, we immediately find ourselves confronting another question. Can we be sure that the data accurately reflect the reality of life within the university? Regrettably, the answer is no. As we will see, several of the key figures supporting Columbia’s high ranking are inaccurate, dubious, or highly misleading. In what follows, we will consider these figures one by one.

In sections 2 through 5, we examine some of the numerical data on students and faculty reported by Columbia to U.S. News — undergraduate class size, percentage of faculty with terminal degrees, percentage of faculty who are full-time, and student-faculty ratio — and compare them with figures computed by other means, drawing on information made public by Columbia elsewhere. In each case, we find discrepancies, sometimes quite large, and always in Columbia’s favor, between the two sets of figures.

In section 6, we consider the financial data underpinning the U.S. News Financial Resources subscore. It is largely based on instructional expenditures, but, as we show, Columbia’s stated instructional expenditures are implausibly large and include a substantial portion of the $1.2 billion that its medical center spends annually on patient care.

Finally, in section 7, we turn to graduation rates and the other “outcome measures” which account for more than one-third of the overall U.S. News ranking. We show that Columbia’s performance on some, perhaps even most, of these measures would plunge if its many transfer students were included.

§2: CLASS SIZE

Eight percent of the U.S. News ranking is based on a measure of undergraduate class size. This measure is a weighted average of five percentage figures, namely the percentage of undergraduate classes in each of five size ranges: (a) those enrolling under 20 students, (b) those with 20–29 students, (c) those with 30–39 students, (d) those with 40–49 students, and (e) those with 50 students or more. U.S. News does not reveal the weights, perhaps to discourage gaming the system, but classes in the smaller size ranges are given greater weight, so that the overall measure tends to be higher if class sizes are smaller. The thinking is that, on the whole, small class sizes should contribute to educational quality.
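Because U.S. News does not publish its weights, the following is only a minimal sketch of how a measure of this kind behaves. The weights in the hypothetical `WEIGHTS` table are invented for illustration and respect nothing more than the stated rule that smaller size ranges count for more; both class-size distributions are likewise made up.

```python
# Sketch of a class-size index built as a weighted average of the five
# size-range percentages (a)-(e). The WEIGHTS are HYPOTHETICAL: U.S. News
# does not disclose its real weights.
WEIGHTS = {
    "a_under_20": 1.00,
    "b_20_29": 0.75,
    "c_30_39": 0.50,
    "d_40_49": 0.25,
    "e_50_plus": 0.00,
}


def class_size_index(percentages: dict[str, float]) -> float:
    """Weighted average of size-range percentages, which should sum to ~100."""
    total = sum(percentages.values())
    if abs(total - 100.0) > 0.5:
        raise ValueError(f"percentages sum to {total}, expected about 100")
    return sum(WEIGHTS[r] * p for r, p in percentages.items()) / 100.0


# Two invented distributions: the first is tilted toward small classes.
mostly_small = {"a_under_20": 80, "b_20_29": 10, "c_30_39": 4, "d_40_49": 2, "e_50_plus": 4}
more_large = {"a_under_20": 60, "b_20_29": 20, "c_30_39": 8, "d_40_49": 4, "e_50_plus": 8}

print(f"{class_size_index(mostly_small):.2f}")
print(f"{class_size_index(more_large):.2f}")
```

Whatever the true weights are, any scheme of this shape rewards a high share in range (a) and a low share in range (e), which is why those two published figures matter most.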

For some reason, U.S. News also does not disclose all of the percentage figures (a), (b), (c), (d), (e) which individual universities have reported to it, and on which its measure is based. It only discloses (a) and (e). Still, from the foregoing, it is clear that a high percentage for (a) and a low percentage for (e) will tend to produce a strong score in this category.2

According to the 2022 U.S. News ranking pages, Columbia reports (a) that 82.5% of its undergraduate classes have under 20 students, whereas (e) only 8.9% have 50 students or more.

These figures are remarkably strong, especially for an institution as big as Columbia. The 82.5% figure for classes in range (a) is particularly extraordinary. By this measure, Columbia far surpasses all of its competitors in the top 100 universities; the nearest runners-up are Chicago and Rochester, which claim 78.9% and 78.5%, respectively.3 Even beyond the top 100, a higher figure was reported by only four of all the 392 schools ranked as National Universities by U.S. News.4 The median value among all National Universities for classes in range (a) was a mere 47.9%. If the 82.5% figure were correct, then, it would attest to a uniquely intense commitment by Columbia to keeping class sizes small.

Although there is no compulsory reporting of information on class sizes to the government, the vast majority of leading universities voluntarily disclose their Fall class size figures as part of the Common Data Set initiative. Each participating institution issues its own information sheet, in an undertaking coordinated jointly by the College Board, Peterson’s, and U.S. News.

The guidelines for completing Section I-3 of the Common Data Set clearly explain how undergraduate class sizes should be counted. To be included, a class should enroll at least one undergraduate. Certain classes are excluded from consideration, such as laboratory and discussion sections associated with a lecture, reading and research courses, internships, non-credit courses, classes enrolling only one student, individual music instruction, and so on. A completed Common Data Set also provides some additional information on class sizes, which gives some insight into the U.S. News percentage figures. U.S. News confirmed to the author that its survey employs the same class size guidelines as the Common Data Set.

Columbia, however, does not issue a Common Data Set. This is highly unusual for a university of its stature. Every other Ivy League school posts a Common Data Set on its website, as do all but eight of the universities among the top 100 in the U.S. News ranking.5 (It is perhaps noteworthy that the runners-up mentioned above, Chicago and Rochester, are also among the eight that do not issue a Common Data Set.)

According to Lucy Drotning, Associate Provost in the Office of Planning and Institutional Research, Columbia prepares two Common Data Sets for internal use: one covering Columbia College and Columbia Engineering, and the other covering the School of General Studies. She added, however, that “The University does not share these.” Consequently, we know no details regarding how Columbia’s 82.5% figure was obtained.

On the other hand, there is a source, open to the public, containing extensive information about Columbia’s class sizes. Columbia makes a great deal of raw course information available online through its Directory of Classes, a comprehensive listing of all courses offered throughout the university. Besides the names of courses and instructors, class meeting times, and so on, this website states the enrollment of each course section. Course listings are taken down at the end of each semester but remain available from the Internet Archive.

Using these data, the author was able to compile a spreadsheet listing Columbia course numbers and enrollments during the semesters used in the 2022 U.S. News ranking (Fall 2019 and Fall 2020), and also during the recently concluded semester, Fall 2021. The entries in this spreadsheet are not merely a sampling of courses; they are meant to be a complete census of all courses offered during those semesters in subjects covered by Arts & Sciences and Engineering (as well as certain other courses aimed at undergraduates). With the help of this spreadsheet, an estimate of the class size figures that would appear on Columbia’s Common Data Set, if it issued one, can be independently obtained.

Most of the information needed to follow the guidelines of the Common Data Set can be gleaned from the Directory of Classes, supplemented by the online Bulletins of Columbia College and Columbia Engineering. For example, the types of classes mentioned above, which the Common Data Set sets aside, may all be recognized and excluded accordingly.

One important condition in the guidelines cannot be determined from the publicly available information, however. This is the requirement that a class have at least one undergraduate enrolled. Since the Directory of Classes does not specify which classes enroll undergraduates, we cannot independently compute precise values for Columbia’s class-size percentages.

Nevertheless, we may estimate these percentages with a high degree of confidence. Courses numbered in the ranges 1000–3000 are described by the University as being “undergraduate courses,” while those in the 4000 range are “geared toward undergraduate students” or “geared toward both undergraduate and graduate students.” It is a solid assumption, therefore, that all but a negligible number of these classes have at least one undergraduate enrolled.6 On the other hand, courses offered in the professional schools are almost never taken by undergraduates: Columbia Law School forbids undergraduates to enroll in its courses, for example, while Columbia Journalism School allows undergraduates in no more than half a dozen of its courses each semester. One may therefore confidently assume that undergraduates enroll in only a negligible number of professional-school courses.7

What remain to be considered are graduate-level courses offered in the faculties that also teach undergraduates, namely those of Arts & Sciences and of Engineering. Courses at the 9000 level, which are almost always reading or research courses, may be excluded from consideration. Among courses at the 5000, 6000, and 8000 levels, a few are explicitly described as closed to undergraduates in the Directory of Classes or in the Engineering School’s Bulletin, and these too may be excluded. The remaining graduate courses, however, which undergraduates can and do take in substantial numbers, are the cause of some genuine uncertainty.

Still, this uncertainty is not too great, because the total number of classes in this upper-level group is small compared with the total number of classes at lower levels. After the exclusions described above are made, there are 939 classes in the upper-level group and 3,798 in the lower-level group.8 Two extreme cases can be imagined: that undergraduates took no 5000-, 6000-, and 8000-level courses, or that they took all such courses (except those already excluded from consideration).9 Under each of these two extreme assumptions, the spreadsheet may be used to compute definite values for the class-size figures.
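The two-scenario calculation can be sketched in a few lines. This sketch assumes a hypothetical spreadsheet whose rows (the illustrative `ClassSection` records) carry a course number, an enrollment, and a flag marking section types the Common Data Set sets aside. Like the text, it uses course level as a stand-in for the unobservable requirement that at least one undergraduate be enrolled. The toy data are invented and are not Columbia's listings.

```python
from dataclasses import dataclass


@dataclass
class ClassSection:
    """One row of a HYPOTHETICAL census spreadsheet (illustrative fields)."""
    number: int              # course number, e.g. 1101 or 6200
    enrollment: int
    excluded: bool = False   # lab/discussion section, reading course, internship, etc.


def size_range(enrollment: int) -> str:
    """Map an enrollment to the Common Data Set size ranges (a)-(e)."""
    if enrollment < 20:
        return "a"
    if enrollment < 30:
        return "b"
    if enrollment < 40:
        return "c"
    if enrollment < 50:
        return "d"
    return "e"


def counts_toward_total(section: ClassSection, include_graduate: bool) -> bool:
    if section.excluded or section.enrollment < 2:  # one-student classes are excluded
        return False
    level = section.number // 1000
    if 1 <= level <= 4:            # 1000-4000 levels: assume undergraduates enrolled
        return True
    if level in (5, 6, 8):         # graduate courses: the uncertain group
        return include_graduate
    return False                   # 9000 level (reading/research) and anything else


def range_percentages(sections, include_graduate: bool) -> dict[str, float]:
    kept = [s for s in sections if counts_toward_total(s, include_graduate)]
    if not kept:
        raise ValueError("no qualifying classes")
    counts = {r: 0 for r in "abcde"}
    for s in kept:
        counts[size_range(s.enrollment)] += 1
    return {r: 100 * n / len(kept) for r, n in counts.items()}


def bracket(sections, size_key: str) -> tuple[float, float]:
    """(low, high) for one size range under the two extreme assumptions."""
    values = [range_percentages(sections, g)[size_key] for g in (False, True)]
    return min(values), max(values)


# Invented toy data, NOT Columbia's actual course listings.
toy = [
    ClassSection(1101, 15), ClassSection(1201, 25), ClassSection(2000, 60),
    ClassSection(3000, 12), ClassSection(4000, 18), ClassSection(6000, 8),
    ClassSection(6100, 45), ClassSection(9000, 3), ClassSection(3500, 1),
    ClassSection(1102, 30, excluded=True),
]

print("range (a):", bracket(toy, "a"))
print("range (e):", bracket(toy, "e"))
```

The output is a bracket under the two extreme assumptions only; the sketch says nothing about where within that bracket the true value falls.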

Since the reality lies somewhere between these unrealistic extremes, it is reasonable to conclude that the true value of figure (a) — namely, the percentage, among Columbia courses enrolling undergraduates, of those with under 20 students — probably lies somewhere between 62.7% and 66.9%. We can be quite confident that it is nowhere near the figure of 82.5% claimed by Columbia.

Reasoning similarly, we find that the true value of figure (e) — namely, the percentage, among Columbia courses enrolling undergraduates, of those with 50 students or more — probably lies somewhere between 10.6% and 12.4%. Again, this is significantly worse than the figure of 8.9% claimed by Columbia, though the discrepancy is not as vast as with figure (a).10

These estimated figures indicate that Columbia’s class sizes are not particularly small compared to those of its peer institutions.11 Furthermore, the year-over-year data from 2019–2021 indicate that class sizes at Columbia are steadily growing. At the moment, ironically, Columbia’s administration is considering a major expansion in undergraduate enrollments, which would increase class sizes even further.

§3: FACULTY WITH TERMINAL DEGREES

Three percent of the U.S. News ranking is based on the proportion of “Full-time faculty with Ph.D or terminal degree.” Columbia reports that 100% of its faculty satisfy this criterion, giving it a conspicuous edge over its rivals Princeton (94%), MIT (91%), Harvard (91%), and Yale (93%).

The 100% figure claimed by Columbia cannot be accurate. The 958 members of the (full-time) Faculty of Columbia College listed in the online Columbia College Bulletin include some 69 persons whose highest degree, if any, is a bachelor’s or master’s degree.12

It is not clear exactly which faculty are supposed to be counted in this calculation. The U.S. News methodology page is silent on this point. The instructions for the Common Data Set, which is co-organized by U.S. News and from which it draws much of its data, stipulate that only non-medical faculty are to be counted in collecting these figures. Information on the degrees held by faculty in Columbia’s professional schools is not readily available.

Even following the most favorable interpretation, however, by counting all faculty, both medical and non-medical — and very optimistically assuming that all faculty in the professional schools have terminal degrees — we are still unable to arrive at a figure rounding to 100%, since the 69 faculty of Columbia College without terminal degrees exceed 1% of the entire cohort of 4,381 full-time Columbia faculty in Fall 2020. If we exclude medical faculty as directed by the Common Data Set, then our calculations should be based on Columbia’s 1,602 full-time non-medical faculty in Fall 2020. We conclude that the proportion of faculty with terminal degrees can be at most (1602-69)/1602, or about 96%. To the extent that professional school faculty lack terminal degrees, this figure will be even lower.
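The two bounds above follow from simple arithmetic, and a short sketch makes the rounding argument explicit. Like the most favorable case in the text, it assumes that the 69 Columbia College faculty are the only full-time faculty without terminal degrees. Both results are therefore upper bounds.

```python
def share_with_terminal_degree(total_faculty: int, without_terminal: int) -> float:
    """Fraction of faculty holding a terminal degree."""
    return (total_faculty - without_terminal) / total_faculty


WITHOUT_TERMINAL = 69  # Columbia College faculty identified from the Bulletin

# Most favorable reading: all 4,381 full-time faculty counted, and every
# professional-school member assumed to hold a terminal degree.
all_faculty = share_with_terminal_degree(4_381, WITHOUT_TERMINAL)
# Common Data Set reading: full-time non-medical faculty only.
non_medical = share_with_terminal_degree(1_602, WITHOUT_TERMINAL)

# A share "rounds to 100%" only if it is at least 99.5%.
print(f"all full-time faculty: {all_faculty:.1%}, rounds to 100%: {all_faculty >= 0.995}")
print(f"non-medical only:      {non_medical:.1%}")
```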

Institutions providing a Common Data Set have to divulge, in section I-1 of the form, the subtotals of full-time non-medical faculty whose highest degrees are bachelor’s, master’s, and doctoral or other terminal degrees, and likewise for part-time faculty. This makes it possible to check (or at least replicate for non-medical faculty) the percentage stated by U.S. News. If Columbia provided a Common Data Set, like the vast majority of its peers, then the existence of substantial numbers of Columbia faculty without terminal degrees could not have been so easily overlooked.13 Columbia’s peers, which acknowledge having faculty without terminal degrees in their Common Data Sets, have been placed at a competitive disadvantage by doing so.

Most of the 69 Columbia College faculty without terminal degrees in their fields are either full-time renewable lecturers in language instruction or faculty in Columbia’s School of the Arts. Conceivably it might be claimed that, for some reason, these groups should not be counted in the calculation. Such an argument encounters significant difficulties, however. One is that without these groups, it is even harder to arrive at the student-faculty ratio of 6:1 reported by Columbia (see §5 below). More fundamentally, it simply is the case that language lecturers and Arts faculty are full-fledged faculty members. Both groups are voting members of the Faculty of Columbia College, Columbia’s flagship undergraduate school, and also of the Faculty of Arts & Sciences.14

In any case, these 69 persons include some distinguished scholars and artists, and even a winner of the Nobel Prize.15 Columbia would surely be a lesser place without them, even if 100% of its faculty really did then hold terminal degrees.

The situation highlights a weakness of the entire practice of ranking. By and large, a university where more faculty have doctorates is likely to be better than one where fewer do. Yet the pressure placed on universities by adopting this measure as a proxy for educational quality can be harmful, if it induces them to hire faculty based on their formal qualifications rather than on a more thoughtful appraisal of their merits. Almost any numerical measure of educational quality carries a similar risk. If it is given too much weight, it will distort the priorities of the institution.16

Still, the ranking is even more defective if it relies on inaccurate data, which seems to be the case here.

§4: PERCENTAGE OF FACULTY WHO ARE FULL-TIME

One percent of the U.S. News ranking is based on “Percent faculty that is full time.” Columbia reported to U.S. News that 96.5% of its (non-medical) faculty are full-time. This puts it significantly ahead of its rivals Princeton (93.8%), Harvard (94.5%), MIT (93.0%), and Yale (86.2%).

As U.S. News clearly states on its methodology page, this figure is supposed to exclude medical faculty while including almost all other faculty in the university. In particular, teaching undergraduates is not a condition for faculty to be included in this count. The breakdown reported by Columbia to U.S. News was that 1,601 faculty were full-time while 173 were part-time. (Readers checking the arithmetic might be puzzled as to why this leads to a percentage figure of 96.5%. As is customary in these matters, part-time faculty are given one-third the weight of full-time faculty. Consequently, of a college with 300 full-time and 300 part-time faculty, it would confusingly be said that 75% of its faculty were full-time, not 50% as one might expect.)

The numbers of full-time non-medical faculty and part-time non-medical faculty at each university must also be filed with the National Center for Education Statistics, a Federal agency, which makes them available to the public through its Integrated Postsecondary Education Data System (IPEDS).17 The percentage in question can therefore be checked from these numbers. For most institutions, naturally, the agreement is quite good. (One reason it might fail to be perfect is that U.S. News also excludes a few other small groups of faculty.)

In the case of Columbia, the two figures differ wildly. Columbia reported to the government that, in Fall 2020, it had 1,583 full-time non-medical faculty and 1,662 part-time non-medical faculty, which works out to 74.1% of Columbia’s non-medical faculty being full-time. This is the lowest such percentage for any university ranked in the top 100 by U.S. News.18 The difference between the percentage reported to U.S. News and the percentage implied by the government data is also far greater for Columbia than for any of these other universities.
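The one-third weighting convention can be checked directly. The sketch below applies it to the hypothetical college with 300 full-time and 300 part-time faculty and to the two pairs of Columbia figures quoted in this section. It does not model the small additional exclusions that U.S. News applies, so it reproduces the convention rather than the exact U.S. News computation.

```python
from fractions import Fraction

PART_TIME_WEIGHT = Fraction(1, 3)  # customary weighting of part-time faculty


def percent_full_time(full_time: int, part_time: int) -> float:
    """Full-time faculty as a percentage of full-time-equivalent faculty."""
    fte = full_time + PART_TIME_WEIGHT * part_time
    return float(100 * full_time / fte)


examples = {
    "hypothetical college (300 FT, 300 PT)": (300, 300),
    "Columbia, as reported to U.S. News": (1_601, 173),
    "Columbia, as reported to the government (Fall 2020)": (1_583, 1_662),
}
for label, (ft, pt) in examples.items():
    print(f"{label}: {percent_full_time(ft, pt):.1f}%")
```

Exact fractions avoid any floating-point noise before the final rounding.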

As we have seen, the figures for full-time non-medical faculty reported to U.S. News and to the government are not much different. The striking discrepancy is between the figure of 173 part-time non-medical faculty, reported by Columbia to U.S. News, and the figure of 1,662 part-time non-medical faculty, reported by Columbia to the government. This discrepancy remains puzzling. Both figures appear to count roughly the same persons. The exclusion of a few other small groups of faculty by U.S. News (administrators with faculty status, faculty on unpaid leaves, and replacements for faculty on sabbatical leaves) seems insufficient to explain such a wide gap.

§5: STUDENT-FACULTY RATIO

Another one percent of the U.S. News ranking is based on the student-faculty ratio. Not only is the student-faculty ratio reported on the Common Data Set, but there is also mandatory reporting of this figure to the government.

Columbia reports a student-faculty ratio of 6 to 1; it has reported this exact same ratio every year since 2008, when the government began collecting this information.

Student-faculty ratios are notoriously slippery figures because of the vexed question of which students and which faculty to count. Nevertheless, U.S. News, the Common Data Set, and the government all more or less agree on a methodology for computing this ratio and provide guidelines explaining how to do it. These state that students and faculty are to be excluded from the count if, and only if, they study or teach “exclusively in stand-alone graduate or professional programs.”19 On an FAQ page, the government provides more detail about this condition: “An example of a graduate program that would not meet this criterion is a school of business that has an undergraduate and graduate program and therefore enrolls both types of students and awards degrees/certificates at both levels. Further, the faculty would teach a mix of undergraduate and graduate students.”

At Columbia, the School of Engineering and Applied Science enrolls both undergraduates and graduates and confers degrees at both levels. The Faculty of Arts & Sciences is nominally distinct from the schools conferring undergraduate and graduate degrees in the liberal arts (Columbia College, the School of General Studies, and the Graduate School of Arts & Sciences), yet the vast majority of courses taken by students in all three schools are those offered by the departments of the Faculty of Arts & Sciences and staffed by members of that Faculty, who teach a mix of undergraduate and graduate students. The graduate programs in Engineering and in Arts & Sciences, therefore, do not qualify as “stand-alone” programs, and their students should be included in the count.

With this understood, the calculation of the student-faculty ratio should run roughly as follows. In Fall 2020, the number of Columbia undergraduates was 8,144, the number of Engineering graduate students was 2,401, and the number of Arts & Sciences graduate students was 3,182, for a total of 13,727 students in the count. As called for by the guidelines, these figures are full-time equivalents, meaning the number of full-time students plus one-third the number of part-time students. Likewise, the number of full-time faculty in Arts & Sciences was 972, while the number in Engineering was 235, for a total of 1,207 full-time faculty in the count.

Columbia provides detailed information in its Statistical Abstract about the numbers of full-time faculty in its various schools, yet it reveals nothing at all about the numbers of part-time faculty. As we saw in §4, the overall proportion of faculty who are full-time is completely unclear, and we know even less about this proportion in the individual schools. The professional schools, with their many clinical faculty, may have a lower proportion of full-time faculty than Arts & Sciences.

If Columbia’s report to U.S. News is correct that the proportion of faculty who are full-time was about 96.5%, then we may treat the contribution of part-time faculty to this ratio as negligible. A correct student-faculty ratio for Columbia, following the stated methodology, would thus appear to be approximately 13,727/1,207, which is about 11 to 1.

If the proportion of faculty who are full-time is lower, as it appears to be from the government reporting, then we cannot simply neglect part-time faculty in the calculation, but we still do not know the proportion of full-time faculty among the faculty in Arts & Sciences and Engineering. If we make the favorable assumption that it agrees with the figure for all non-medical faculty discussed in §4, then the correct student-faculty ratio would be the ratio above multiplied by 0.741, which is somewhat more than 8 to 1.

Universities currently do not have to report any details of how they calculated their student-faculty ratios, but they did in 2008, the first year in which this figure was collected. Columbia’s report for that year to the government reveals, when compared with enrollment figures for the same year, that the ratio was computed using undergraduate enrollments only. Graduate students in both Engineering and Arts & Sciences were excluded, contrary to the guidelines.

Columbia is not the only elite university to bend the rules in this way. All of the top ten in the U.S. News ranking appear to do it, in fact. Penn used to append a remark to its government reporting stating, “This is the ratio of undergraduate students to faculty.” It continues to bend the rules but no longer acknowledges it.20 At Johns Hopkins, the reported ratio jumped abruptly from 10:1 to 7:1 in a single year, clearly indicating that the cohorts being counted had been changed in Hopkins’s favor. Still, many less prestigious universities continue to count graduate students when computing their ratios, and following the rules more scrupulously has placed them at a competitive disadvantage.

Columbia’s undergraduate enrollments have grown substantially in recent years, while faculty growth has struggled to keep pace with them. In light of this, it is not surprising that even the ratio of undergraduates to undergraduate faculty now exceeds 6 to 1, at least assuming the favorable number for full-time faculty. Using the numbers quoted above for Fall 2020, it works out to 0.965 x 8,144/1,207 = 6.51 to 1, or 7 to 1 if we round off like U.S. News.
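The three ratios discussed in this section can be reproduced from the Fall 2020 figures quoted above. In this sketch, `ft_share` is an illustrative parameter for the proportion of full-time-equivalent faculty who are full-time. The full-time-equivalent faculty count is then the full-time count divided by `ft_share`, and setting it to 1.0 corresponds to neglecting part-time faculty, as in the 11-to-1 estimate.

```python
# Fall 2020 figures quoted in this section (students are full-time equivalents).
UNDERGRADS = 8_144
ENGINEERING_GRAD = 2_401
ARTS_SCIENCES_GRAD = 3_182
FT_FACULTY = 972 + 235  # full-time faculty: Arts & Sciences + Engineering


def student_faculty_ratio(students: float, ft_faculty: int, ft_share: float) -> float:
    """Students per FTE faculty member, with FTE faculty = ft_faculty / ft_share."""
    return students * ft_share / ft_faculty


guideline_students = UNDERGRADS + ENGINEERING_GRAD + ARTS_SCIENCES_GRAD

ratios = {
    # Guideline count, part-time faculty treated as negligible.
    "guidelines, part-time neglected": student_faculty_ratio(guideline_students, FT_FACULTY, 1.0),
    # Guideline count, full-time share from the government data (74.1%).
    "guidelines, 74.1% full-time": student_faculty_ratio(guideline_students, FT_FACULTY, 0.741),
    # Undergraduates only, full-time share from the U.S. News report (96.5%).
    "undergraduates only, 96.5% full-time": student_faculty_ratio(UNDERGRADS, FT_FACULTY, 0.965),
}
for label, r in ratios.items():
    print(f"{label}: {r:.2f} to 1")
```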

§6: SPENDING ON INSTRUCTION

Ten percent of the U.S. News ranking is based on “Financial resources per student.” Columbia comes in 9th place in this category, notably ahead of its rival Princeton, which comes in 13th place. This may seem surprising, since Princeton’s endowment per student amounts to over $3 million, while Columbia’s endowment per student is less than one-eighth as much.21

It turns out, however, that what U.S. News means by “Financial resources” is not endowment, but rather annual spending per student. It treats this, perhaps questionably, as a proxy for academic merit. The categories of spending U.S. News considers are research, public service, instruction, academic support, student services, and institutional support. The latter three refer to certain types of administrative spending, which it seems particularly dubious to regard as a marker of educational quality.22

Columbia’s strong showing in the Financial Resources category, however, appears to be chiefly attributable to the amount it claims to spend on instruction. It reported to the government that its instructional spending in 2019–20 was slightly over $3.1 billion. This is a truly colossal amount of money. It works out to over $100,000 annually per student, graduates and undergraduates alike. It is by far the largest such figure among those filed with the government by more than 6,000 institutions of higher learning.23 It is larger than the corresponding figures for Harvard, Yale, and Princeton combined.

One is hard pressed to imagine how Columbia could spend such an immense sum on instruction alone. Faculty salaries do not come close to accounting for it. In 2019–20, full-time non-medical faculty salary outlays amounted to $289 million, which is less than 10% of the $3.1 billion instruction figure. Even if generous allowances are made for part-time and medical faculty, and all are counted purely as instructional expenses, a huge sum remains unaccounted for.24

In other settings, however, Columbia portrays its expenditures quite differently, claiming to spend less on instruction and more on other things. For example, Columbia often proudly proclaims that it spends more than $1 billion annually on research, even though in its government reporting (on which U.S. News relies for many of its figures) Columbia represents its research expenditures as far smaller, about $763 million. And in its Consolidated Financial Statements, Columbia claims to spend only about $2 billion on “Instruction and educational administration” (presumably including academic support and student services), which is over $1 billion lower than the government reports state. On the other hand, in the Consolidated Financial Statements, another very large cost is included — more than $1.2 billion for “Patient care expense” — though it is completely absent from the government reports. The bottom lines in the two accounts miraculously agree to the penny, but the individual line items differ dramatically. When the two accounts are compared, it becomes clear that much of what is construed as patient care expense in the Consolidated Financial Statements is construed as instructional expense in the government reports.

How Columbia portrays its total expenditures for Fiscal Year 2020 (in dollars)

| In reporting to the U.S. Department of Education | Amount | In its Consolidated Financial Statements | Amount |
|---|---:|---|---:|
| Instruction | 3,126,101,000 | Instruction and educational administration | 2,061,981,000 |
| Research | 762,719,000 | Research | 660,083,000 |
| Public service | 238,262,000 | Patient care expense | 1,215,438,000 |
| Academic support | 107,487,000 | Operation and maintenance of plant | 305,676,000 |
| Student services | 185,141,000 | Institutional support | 287,176,000 |
| Institutional support | 338,153,000 | Auxiliary enterprises | 161,313,000 |
| Auxiliary enterprises | 279,389,000 | Depreciation | 292,769,000 |
| | | Interest | 52,816,000 |
| Total expenses | 5,037,252,000 | Total expenses | 5,037,252,000 |
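Because the two columns of the table list different line items, a useful sanity check is that each column sums to the stated total. The sketch below transcribes the table, placing Interest (which appears only in the Consolidated Financial Statements) in that column. It then computes the gap between the two instruction figures and sets it beside the patient care expense that is absent from the government report.

```python
GOVERNMENT_REPORT = {
    "Instruction": 3_126_101_000,
    "Research": 762_719_000,
    "Public service": 238_262_000,
    "Academic support": 107_487_000,
    "Student services": 185_141_000,
    "Institutional support": 338_153_000,
    "Auxiliary enterprises": 279_389_000,
}

FINANCIAL_STATEMENTS = {
    "Instruction and educational administration": 2_061_981_000,
    "Research": 660_083_000,
    "Patient care expense": 1_215_438_000,
    "Operation and maintenance of plant": 305_676_000,
    "Institutional support": 287_176_000,
    "Auxiliary enterprises": 161_313_000,
    "Depreciation": 292_769_000,
    "Interest": 52_816_000,
}

gov_total = sum(GOVERNMENT_REPORT.values())
fs_total = sum(FINANCIAL_STATEMENTS.values())
instruction_gap = (GOVERNMENT_REPORT["Instruction"]
                   - FINANCIAL_STATEMENTS["Instruction and educational administration"])

print(f"totals: {gov_total:,} vs {fs_total:,}")
print(f"instruction gap: {instruction_gap:,}")
print(f"patient care (statements only): {FINANCIAL_STATEMENTS['Patient care expense']:,}")
```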

As we have seen, Columbia benefits enormously in the U.S. News ranking from the immense amount it claims to spend on instruction. It also benefits from the amount it claims to spend on research, but to a much lesser extent. This is because U.S. News pro-rates the amounts spent on research and public service, multiplying them by the fraction of students who are undergraduates.25 Columbia has a gigantic number of graduate students — more fully-in-person graduate students than any other American university, in fact26 — and so its proportion of undergraduates is unusually small, about one-third. Consequently, a research dollar is worth only one-third as much to Columbia’s position in the ranking as an instruction dollar. What is most advantageous to Columbia’s position in the ranking is to construe its spending on other things, such as patient care, chiefly as spending on instruction, not on research or anything else. This is exactly what it has done.27
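The incentive described above can be illustrated with a small sketch. It assumes, following the description in the text, that research and public service spending are multiplied by the undergraduate share while the other four categories count in full. It is not the exact U.S. News formula: the division by enrollment is omitted because it affects both scenarios equally. The $100 million reclassification is hypothetical, and the undergraduate share is the text's "about one-third".

```python
from fractions import Fraction

# Categories pro-rated by the undergraduate share, per the text.
PRORATED = ("Research", "Public service")


def weighted_spending(spending: dict[str, int], undergrad_share: Fraction) -> Fraction:
    """Sum of spending, with the pro-rated categories scaled by undergrad_share."""
    total = Fraction(0)
    for category, amount in spending.items():
        weight = undergrad_share if category in PRORATED else 1
        total += weight * amount
    return total


# Government-reported FY2020 figures for the six categories U.S. News considers.
FY2020 = {
    "Instruction": 3_126_101_000,
    "Research": 762_719_000,
    "Public service": 238_262_000,
    "Academic support": 107_487_000,
    "Student services": 185_141_000,
    "Institutional support": 338_153_000,
}
UNDERGRAD_SHARE = Fraction(1, 3)  # "about one-third", as stated in the text

base = weighted_spending(FY2020, UNDERGRAD_SHARE)

# HYPOTHETICAL: reclassify $100 million of research spending as instruction.
shifted = dict(FY2020)
shifted["Research"] -= 100_000_000
shifted["Instruction"] += 100_000_000
gain = weighted_spending(shifted, UNDERGRAD_SHARE) - base

print(f"weighted spending rises by ${float(gain):,.0f}")
```

Under these assumptions each dollar moved from research to instruction adds two-thirds of a dollar to the weighted total.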

Of course, these balance sheets may be expected to conform to different accounting specifications,28 and they are intended to satisfy the technical requirements of auditors, not to inform the public. Still, it is difficult to avoid the conclusion that substantial expenditures, construed as patient care in one setting, are construed as instructional costs in another, when this works to Columbia’s advantage in the U.S. News ranking.

A cynic might suggest that all universities do the same, but this is not so. New York University, for example, reports in its Consolidated Financial Statements for fiscal year 2020 that $1.96 billion was spent on “Instruction and Other Academic Programs,” including $1.34 billion on salary and fringe, while $6.77 billion was spent on “Patient Care”; meanwhile, in its government reporting for the same year, it reports that $1.79 billion was spent on “Instruction.” At NYU, clearly, patient care has not been subsumed into instruction, as seems to be the case at Columbia. U.S. News ranks NYU in 29th place for Financial Resources and in 28th place overall.
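The contrast can be summarized in a single ratio: instruction spending reported to the government divided by instruction spending in the consolidated financial statements. The sketch below uses only figures quoted in this section, again assuming that Columbia's government figure is the left-hand column of the table. The NYU amounts are the rounded ones given above, and the two universities label their statement categories differently, so the comparison is rough.

```python
# Instruction spending (dollars): government reporting vs. consolidated
# financial statements, as quoted in the text.
# Assumption: Columbia's government figure is the left-hand column of the table.
figures = {
    "Columbia": {"government": 3_126_101_000, "financial_statements": 2_061_981_000},
    "NYU": {"government": 1_790_000_000, "financial_statements": 1_960_000_000},
}

ratios = {}
for school, f in figures.items():
    ratios[school] = f["government"] / f["financial_statements"]
    print(f"{school}: government / financial statements = {ratios[school]:.2f}")
```

On these figures Columbia reports roughly half again as much instruction to the government as in its financial statements, while NYU reports somewhat less.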

How, then, have Columbia’s expenditures on patient care or research been construed instead as expenditures on instruction? This is difficult to answer from the available data. What can be observed is that, in the single year between 2007 and 2008, reported spending on instruction rose by over half a billion dollars, reaching 58% of total expenditures — not far below the proportions that have been reported since — while, at the same time, reported spending on “independent operations” fell from $384 million to zero, where it has since remained. The government defines “independent operations” as “Expenses associated with operations that are independent of or unrelated to the primary missions of the institution (i.e., instruction, research, public service).” Apparently, Columbia decided in 2008 that all of its expenses for independent operations were better classified as expenses for instruction. Without knowing what these expenses were, we cannot judge the propriety of this decision.29

More recently, the way that Columbia describes the function of its full-time faculty in its government reporting has notably changed. Until academic year 2016-17, the function of all full-time faculty (then comprising some 3,848 persons) was described as “Instruction/research/public service.” Since that time, however, their function has instead been described as “Primarily Instruction.” This includes over 2,000 professors at Columbia’s medical school, who devote much of their time to research and patient care, and who might be surprised to learn that their primary function is the training of Columbia’s medical students, whom they substantially outnumber.30

It seems clear that the unparalleled amount that Columbia claims to spend on instruction does not reflect any unparalleled features of the undergraduate education it offers. Rather, it appears to be a consequence of various distinctive bookkeeping techniques.

§7: GRADUATION RATES AND OTHER “OUTCOME MEASURES”

A large portion of the U.S. News ranking — 35 percent in all — is based on retention and graduation rates, which are measured in several different ways. U.S. News emphasizes these factors more heavily than it once did.31 In the past, U.S. News was harshly criticized for its emphasis on the quality of a university’s intake, especially the selectivity of admissions. This, it was argued, was overly favorable to wealthy, elite institutions, while also driving those institutions to become excessively selective. Perhaps in response, U.S. News eliminated the weight given to selectivity, while increasing the weight given to “outcomes.” These include not only retention and graduation rates, but also student debt, on which a further 5 percent of the ranking is based.32

Columbia’s reported performance on these outcome measures is nothing short of extraordinary. In the overall “Outcomes Rank,” it comes in third place, surpassed only by Princeton and Harvard. In the “Graduation and Retention Rank,” it is tied for first place with Princeton. Columbia’s high performance on these subscores, clearly, has played a crucial role in its ascent in the overall ranking. Columbia’s administration acknowledges this, noting: “Columbia had been ranked third from 2019 to 2021; the upward move for 2022 can be attributed in part to its strong graduation rates.” (Columbia might well tie Princeton and Harvard in the Outcomes Rank too, except that it reports a higher student debt burden, doubtless related to its smaller endowment.)

Among the figures that go into these subscores, the most heavily weighted are graduation rates. U.S. News tells us that (a) 96% of Columbia undergraduates graduate in six years or less, and that (b) the same is true of 95% of undergraduates who held a Pell grant (a government subsidy for needy students) and (c) of 97% of those who did not. Fully 22.6% of the weight in the entire U.S. News ranking rests on these three figures alone. Clearly, U.S. News regards graduation rates as highly representative of the overall quality of a university.

One might be tempted to infer that Columbia is structurally similar to Harvard, Yale, and Princeton and has succeeded in competing with them on their own terms. This is far from being true, however. Columbia is profoundly different from its rivals in that it enrolls an enormous number of transfer students, who are not included in the figures above.

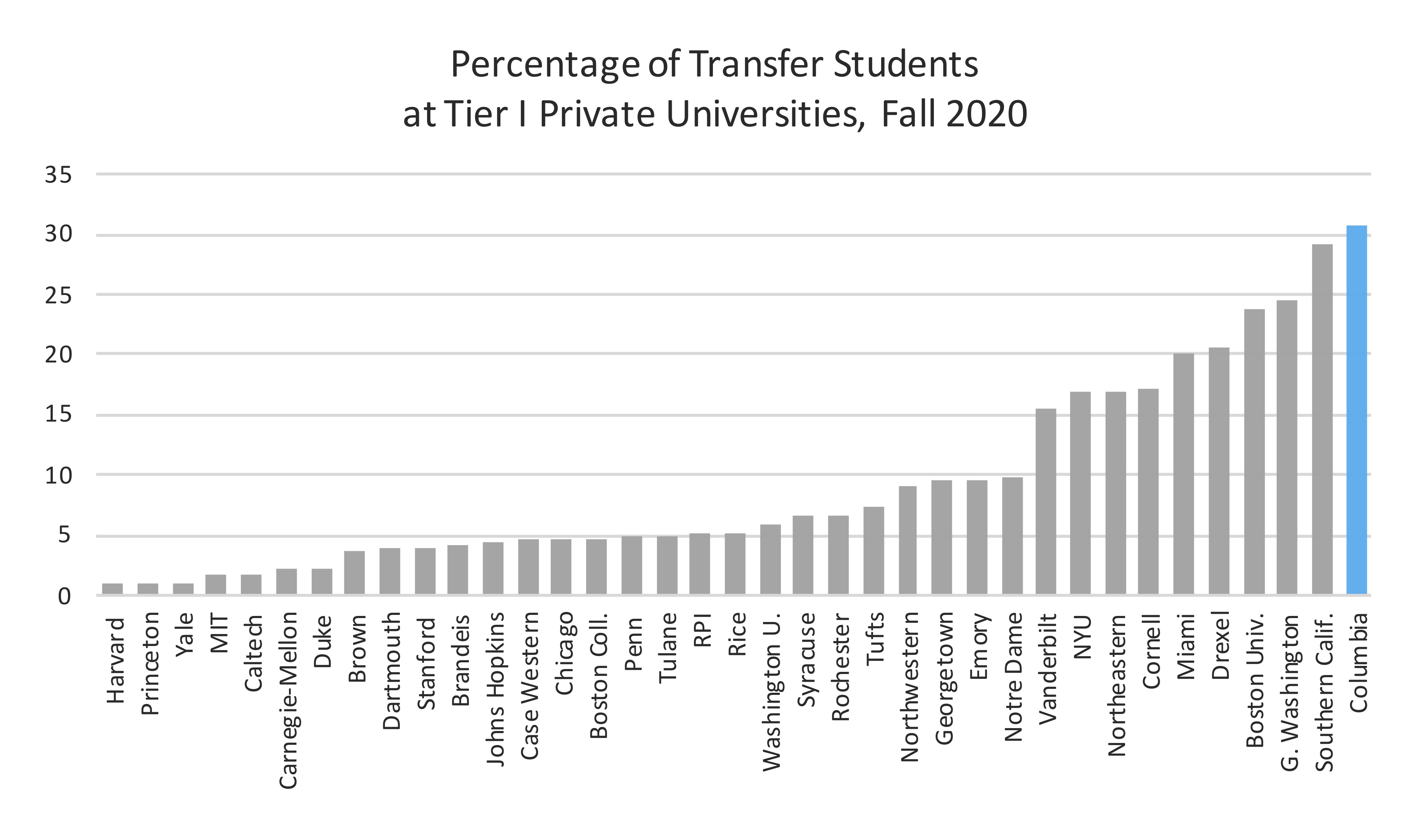

Columbia reported to the government that in Fall 2020, over 30% of its incoming undergraduates were transfer students. This is a larger proportion than at any other Tier I private university (see chart).33 Transfer students at Columbia are mostly enrolled in the School of General Studies, where more than 75% of all students arrive with some transfer credit, but the School of Engineering’s 3-2 Combined Plan may also contribute significant numbers of transfer students.

There is nothing inherently wrong with having many transfer students. Some leading public universities, including several in the University of California system, have a higher proportion of transfer students than Columbia. Their transfer students largely come from two-year community colleges in the same state, providing a route to a bachelor’s degree for students at these colleges. This pathway enjoys broad support as an avenue to social and economic advancement for disadvantaged groups.

A disturbing feature of the situation at Columbia, however, is that transfer students fare significantly less well than other undergraduates. This is revealed by their six-year graduation rate, a common measure of student outcomes (and one of the factors considered by U.S. News). Of the non-transfer students who matriculated in 2012-13, fully 96% graduated in six years; but of the transfer students, only 85% graduated during that same period. This points to considerable attrition among transfer students.34 Such a pattern is unusual for elite universities with a large transfer population: at the University of California schools, for example, transfer students have higher graduation rates than non-transfers.35 Transfer students at Columbia are far more likely to receive Pell grants than non-transfer students, suggesting that financial hardship may be part of the problem.36

Financial aid at Columbia tends to be less generous for transfer students, because they usually enroll in the Combined Plan or in the School of General Studies. The website of Columbia College and Columbia Engineering conspicuously states that Columbia’s financial aid meets 100% of demonstrated need. On the other hand, the website of the Combined Plan emphasizes that it does not promise to meet demonstrated financial need and, furthermore, that it guarantees on-campus housing for one year only. Likewise, the General Studies website does not promise to meet 100% of demonstrated need, stipulating instead that “Scholarships are awarded to students with demonstrated financial need and are influenced by academic achievement.”37 The disparity between the financial aid packages offered by Columbia College and Columbia Engineering, on the one hand, and by General Studies, on the other, is quite dramatic. Readers may verify this for themselves by entering the same income figures into the College Board’s Net Price Calculator for Columbia College/Columbia Engineering and for General Studies.38

Further statistical information on transfer students at Columbia is hard to come by. Unfortunately, the data reported to the government mostly exclude transfer students; the graduation rate and the number of Pell grants, both mentioned above, are rare exceptions. The government’s methodology excludes transfer students from the data on such important matters as test scores, selectivity, yield, and first-year retention rates. U.S. News excludes them from its graduation rates and student debt figures as well. Consequently, transfer students, who make up a sizable fraction of all Columbia undergraduates, hardly even exist as far as the U.S. News ranking is concerned.

The situation here differs from those discussed in earlier sections. In each previous section, what was at issue was a discrepancy between two figures, both obtained from data provided by Columbia. Regarding class sizes, the information provided to U.S. News conflicts with the information in the Directory of Classes. Regarding terminal degrees, the information provided to U.S. News conflicts with the information in the Columbia College Bulletin. Regarding full-time faculty, the information provided to U.S. News conflicts with the information provided to the Department of Education. And so on.

On the other hand, regarding “outcome measures” like graduation rates, retention rates, and student debt, there is no evidence of any inaccuracy in the data provided by Columbia to the government, on which U.S. News evidently relies. The U.S. News graduation rate figures for all schools, including Columbia, can be accurately reproduced from the government data,39 using U.S. News’s stated methodology, which excludes transfer students. The problem is that outcomes for transfer students do not show up in the data or the ranking at all. This creates incentives for a university like Columbia to seek superlative outcomes for non-transfers, while displaying less concern for transfers, who may be treated more as a source of revenue.

We have no information on the test scores or first-year retention rates of transfer students, and we can only speculate as to how these parameters (accounting for 5% and 4.4%, respectively, of the U.S. News ranking) would change if they were included.40 We can, however, get a sense of how the inclusion of transfers would affect six-year graduation rates, by examining the figures reported to the government for the 2012-13 entering cohort, the last year for which data on transfers are as yet available.

In this context, we return to the graduation rates referred to as (a), (b), (c) earlier in this section. (a): Using the figures for the 2012-13 cohort, we find that Columbia’s six-year graduation rate, if transfers are excluded, would be 96%, matching the figure in U.S. News (which uses a larger cohort). Yet if transfers are included, this percentage drops to 92%. This may not seem like a big drop, but it matters a lot for the ranking. Using the first figure, Columbia is in 6th place, surpassed only by Harvard, Notre Dame, Princeton, Yale, and Duke. Using the second, it slips all the way to 26th place.41 (b): Likewise, for students holding a Pell grant, the six-year graduation rate for the 2012-13 cohort is 93% excluding transfers, close to the U.S. News figure; but it falls to 83% if transfers are included. (c): Finally, for those not holding a Pell grant, the six-year graduation rate for the 2012-13 cohort is 96% excluding transfers, again close to the U.S. News figure; but it again falls to 94% if transfers are included.42
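The figures in (a) fit together as a weighted average, and the weight can be recovered from them. A minimal sketch, assuming that the 92% combined rate is simply the cohort-weighted average of the 96% non-transfer rate and the 85% transfer rate quoted earlier in this section (all rounded to whole percents): solving for the weight gives the implied share of transfers in the 2012-13 entering cohort. The function names are ours.

```python
def pooled_rate(rate_a: float, rate_b: float, share_b: float) -> float:
    """Graduation rate of a combined cohort in which a fraction share_b belongs to group b."""
    return (1 - share_b) * rate_a + share_b * rate_b


def implied_share(rate_a: float, rate_b: float, pooled: float) -> float:
    """Solve pooled_rate(rate_a, rate_b, s) == pooled for the group-b share s."""
    return (rate_a - pooled) / (rate_a - rate_b)


# Six-year graduation rates (percent) for the 2012-13 cohort, as quoted in the text.
NON_TRANSFER, TRANSFER, COMBINED = 96, 85, 92

share = implied_share(NON_TRANSFER, TRANSFER, COMBINED)
print(f"Implied transfer share: {share:.0%}")

# Each published rate is rounded to a whole percent, so bracket the answer:
# the share grows with either group rate and shrinks as the pooled rate grows.
low = implied_share(NON_TRANSFER - 0.5, TRANSFER - 0.5, COMBINED + 0.5)
high = implied_share(NON_TRANSFER + 0.5, TRANSFER + 0.5, COMBINED - 0.5)
print(f"Range allowed by rounding: {low:.0%} to {high:.0%}")
```

The implied share is about 36%, and the rounding of the published rates leaves room for anything from roughly 27% to 45%. This is broadly in line with the figure of over 30% quoted above for Fall 2020, although that figure concerns a different year.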

Since these figures, as mentioned above, account for fully 22.6% of the overall U.S. News ranking, there is a strong chance that Columbia’s position in this ranking would tumble if transfer students were included.

The picture coming into focus is that of a two-tier university, which educates, side by side in the same classrooms, two large and quite distinct groups of undergraduates: non-transfer students and transfer students. The former students lead privileged lives: they are very selectively chosen, boast top-notch test scores, tend to hail from the wealthier ranks of society, receive ample financial aid, and turn out very successfully as measured by graduation rates. The latter students are significantly worse off: they are less selectively chosen, typically have lower test scores (one surmises, although acceptance rates and average test scores for the Combined Plan and General Studies are well-kept secrets),43 tend to come from less prosperous backgrounds (as their higher rate of Pell grants shows), receive much stingier financial aid, and have considerably more difficulty graduating.

No one would design a university this way, but it has been the status quo at Columbia for years. The situation is tolerated only because it is not widely understood. The U.S. News ranking, by effacing transfer students from its statistical portrait of the university, bears some responsibility for this. The ranking sustains a perception of the university that is at odds with reality.

§8: CONCLUSION

No one should try to reform or rehabilitate the ranking. It is irredeemable. In Colin Diver’s memorable formulation, “Trying to rank institutions of higher education is a little like trying to rank religions or philosophies. The entire enterprise is flawed, not only in detail but also in conception.”

Students are poorly served by rankings. To be sure, they need information when applying to colleges, but rankings provide the wrong information. As many critics have observed, every student has distinctive needs, and what universities offer is far too complex to be projected to a single parameter. These observations may partly reflect the view that the goal of education should be self-discovery and self-fashioning as much as vocational training. Even those who dismiss this view as airy and impractical, however, must acknowledge that any ranking is a composite of factors, not all of which pertain to everyone. A prospective engineering student who chooses the 46th-ranked school over the 47th, for example, would be making a mistake if the advantage of the 46th school is its smaller average class sizes. For small average class sizes are typically the result of offering more upper-level courses in the arts and humanities, which our engineering student likely will not take at all.

College applicants are much better advised to rely on government websites like College Navigator and College Scorecard, which compare specific aspects of specific schools. A broad categorization of institutions, like the Carnegie Classification, may also be helpful — for it is perfectly true that some colleges are simply in a different league from others — but this is a far cry from a linear ranking. Still, it is hard to deny, and sometimes hard to resist, the visceral appeal of the ranking. Its allure is due partly to a semblance of authority, and partly to its spurious simplicity.

Perhaps even worse than the influence of the ranking on students is its influence on universities themselves. Almost any numerical standard, no matter how closely related to academic merit, becomes a malignant force as soon as universities know that it is the standard. A proxy for merit, rather than merit itself, becomes the goal.

When U.S. News emphasized selectivity, the elite universities were drawn into a selectivity arms race and drove their acceptance rates down to absurdly low levels. Now it emphasizes graduation rates instead, and it is not hard to foresee that these same universities will graduate more and more students whose records do not warrant it, just to keep graduation rates high. For the same reason, they will reject applicants who seem erratic, no matter how brilliant, in favor of those who are reliable, no matter how dull. We have seen how, as transfer students have remained invisible in the ranking, Columbia has fallen into the habit of accepting more and more transfer students and offering them inferior financial aid. Cause and effect are difficult to prove but easy to imagine.

In the same vein, notice that seven percent of the U.S. News ranking is based on “Faculty compensation.” Well and good: it stands to reason that those universities which pay faculty more are likely, on the whole, to be better schools. Yet a close look at the methodology reveals that U.S. News counts only the average pay of ladder-track faculty. This creates an obvious incentive to divide the faculty in two, enriching a small number of well-paid ladder-track faculty while stiffing a large number of poorly-paid lecturers and adjuncts.
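The incentive can be made concrete with a toy example. The sketch below assumes, following the description of the methodology above, that only ladder-track faculty enter the compensation average; the two staffing plans and all salaries are invented for illustration.

```python
from statistics import mean

# Each entry is (annual salary in dollars, is_ladder_track). All figures are hypothetical.
plan_a = [(150_000, True)] * 100                          # one unified faculty
plan_b = [(200_000, True)] * 60 + [(50_000, False)] * 80  # faculty divided in two


def ladder_average(faculty):
    """Average salary over ladder-track faculty only (the population counted, per the text)."""
    return mean(salary for salary, ladder in faculty if ladder)


def overall_average(faculty):
    """Average salary over everyone who teaches."""
    return mean(salary for salary, _ in faculty)


for name, plan in (("Plan A", plan_a), ("Plan B", plan_b)):
    print(f"{name}: ladder-only average ${ladder_average(plan):,.0f}, "
          f"overall average ${overall_average(plan):,.0f}")
```

Plan B scores better on the counted measure ($200,000 against $150,000) even though the average instructor under Plan B is paid less.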

Even on its own terms, the ranking is a failure because the supposed facts on which it is based cannot be trusted. Eighty percent of the U.S. News ranking of a university is based on information reported by the university itself. This information is detailed and subtle, and the vetting conducted by U.S. News is cursory enough to allow many inaccuracies to slip through. Institutions are under intense pressure to present themselves in the most favorable light. This creates a profound conflict of interest, which it would be naive to overlook.

Faculty and other interested parties cannot afford just to ignore the ranking, much as it deserves to be ignored. We need to be aware of its role in creating and sustaining a system that is in many ways indefensible.

The role played by Columbia itself in this drama is troubling and strange. In some ways its conduct seems typical of an elite institution with a strong interest in crafting a positive image from the data that it collects. Its choice to count undergraduates only, contrary to the guidelines, when computing student-faculty ratios is an example of this. Many other institutions appear to do the same. Yet in other ways Columbia seems atypical, and indeed extreme, either in its actual features or in those that it dubiously claims. Examples of the former include its extremely high proportion of undergraduate transfer students, and its enormous number of graduate students overall; examples of the latter include its claim that 82.5% of undergraduate classes have under 20 students, and its claim that it spends more on instruction than Harvard, Yale, and Princeton put together.

All in all, we have seen that 13% of the U.S. News ranking is based on reported figures that conflict with data released elsewhere by Columbia; that another 10% rests on questionable assertions about the financial resources Columbia devotes to its students; and that 22.6% is based on graduation rates that would surely fall if Columbia’s transfer students were counted. A further 14.4% is based on other factors (first-year retention, test scores, and student debt) that also do not count transfer students and that might well be lower if they did, although firm evidence of this is lacking. Yet another 20% comes from the “peer assessment survey” reflecting Columbia’s reputation with administrators at other schools — but, as Malcolm Gladwell points out, they may rely for their information largely on the previous year’s ranking, creating an echo chamber in which the biases and inaccuracies of the ranking are only amplified.

There remains a final 20%, based on factors that have not been investigated here: faculty compensation, alumni giving, and so on. It would be harder to explore these factors, because no data from other sources seem to be readily available. It is striking that, in every case where the data reported by Columbia to U.S. News could be checked against another source, substantial discrepancies were revealed.
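The accounting in the last two paragraphs can be checked by adding up the shares. The sketch below uses only percentages stated in this article; the 14.4% figure is taken to be first-year retention (4.4%), test scores (5%), and student debt (5%), as given earlier.

```python
from math import isclose

# Percent of the U.S. News ranking, grouped as in the text.
components_of_14_4 = {"First-year retention": 4.4, "Test scores": 5.0, "Student debt": 5.0}

tally = {
    "Figures conflicting with other Columbia data": 13.0,
    "Claims about financial resources devoted to students": 10.0,
    "Graduation rates that exclude transfers": 22.6,
    "Other measures that exclude transfers": sum(components_of_14_4.values()),
    "Peer assessment survey": 20.0,
    "Not investigated (faculty compensation, alumni giving, ...)": 20.0,
}

for category, weight in tally.items():
    print(f"{weight:5.1f}%  {category}")

total = sum(tally.values())
print(f"{total:5.1f}%  Total")
assert isclose(total, 100.0)
```

The six categories together account for 100% of the ranking.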

There should be vigorous demands for changes, but changes will be hard to make. The incentives for bad behavior will remain as strong as ever. Columbia has come to depend, for example, on transfer students as a source of tuition revenue. Furthermore, a culture of secrecy, a top-down management structure, and atrophied instruments of governance at Columbia have hamstrung informed debate and policymaking.

It would not be adequate, therefore, to address the accuracy of the facts underpinning Columbia’s ranking in isolation. Root-and-branch reform is needed. Columbia should make a far greater commitment to transparency on many fronts, including budget, staffing, admissions, and financial aid. Faculty should insist on thorough oversight in all these matters, and on full participation in decision-making about them. The positions they arrive at should be shared and debated with trustees, students, alumni, and the public.

In 2003, when Columbia was ranked in 10th place by U.S. News, its president, Lee Bollinger, told the New York Times, “Rankings give a false sense of the world and an inauthentic view of what a college education really is.” These words ring true today. Even as Columbia has soared to 2nd place in the ranking, there is reason for concern that its ascendancy may largely be founded, not on an authentic presentation of the university’s strengths, but on a web of illusions.

It does not have to be this way. Columbia is a great university and, based on its legitimate merits, should attract students comparable to the best anywhere. By obsessively pursuing a ranking, however, it demeans itself. The sooner it changes course, the better.

Generally, statements about the U.S. News rankings of specific schools, as well as figures reported by U.S. News about these schools, are drawn from the ranking pages of the individual schools, linked from the index page, Best National University Rankings, on the U.S. News website. Most of the figures discussed herein are under the tabs labeled Rankings, Academics, and Admission & Financial Aid, on the ranking pages. Many of these figures, however, are visible only to paid subscribers to U.S. News College Compass.

Assertions about the methodology followed by U.S. News are based on its two methodology pages, How U.S. News Calculated the 2022 Best Colleges Rankings and A More Detailed Look at the Ranking Factors.

Statements about government reporting by colleges and universities refer to the mandatory reports filed annually by these institutions with the National Center for Education Statistics, an agency of the U.S. Department of Education, and distributed to the public via the Integrated Postsecondary Education Data System (IPEDS). Data reported by individual institutions may be viewed using the Reported Data tab on Look Up An Institution. Comparative data encompassing several institutions may be obtained from Compare Institutions; after institution names, reporting years, and variable names are entered, the user may download a spreadsheet in comma-separated format with the data requested. In what follows, a comparative IPEDS search is documented by linking to an Excel spreadsheet generated in this fashion; the reporting year is 2020 (the most recent one available) unless otherwise stated. When performing IPEDS searches, a list of the UnitID numbers of the universities ranked by U.S. News in the top 100 National Universities is useful.

The most obviously relevant category is “Hospital services,” for which the IPEDS instructions refer to an advisory stating, “This category includes...expenses for direct patient care such as prevention, diagnosis, treatment, and rehabilitation.” The University of Pennsylvania reported expenditures of $6.8 billion in this category in fiscal year 2020, while Columbia reported zero. The reason for this difference appears to be that the instructions call for institutions to “Enter all expenses associated with the operation of a hospital reported as a component of an institution of higher education.” Several hospitals in the Philadelphia area are owned and operated by Penn, whereas the hospitals and clinics where Columbia doctors provide care, such as NewYork-Presbyterian on Columbia’s medical campus, are merely affiliated with Columbia, not part of it. However important this distinction may be from an accounting standpoint, it clearly has no bearing on the quantity or quality of instruction offered by these universities.

As mentioned in a previous footnote, some other universities, such as Yale and Wake Forest, appear to report patient care expenditures in the category “Academic support.” This is just as valuable to their U.S. News ranking as Columbia’s choice of “Instruction.” The justification here appears to be that the instructions say, “Include expenses for medical, veterinary and dental clinics if their primary purpose is to support the institutional program, that is, they are not part of a hospital.” But what appears to be meant by this is clinics whose primary purpose is instruction, for as the IPEDS glossary puts it, “Academic support” should include “a demonstration school associated with a college of education or veterinary and dental clinics if their primary purpose is to support the instructional program.”

The reality is that none of these categories seems well suited to the patient care expenditures of a university with a large medical center including thousands of clinical professors working in affiliated hospitals. Few universities choose the category “Other Functional Expenses” to describe these costs, but to a lay observer, it seems to fit better than the others, even though it carries no benefit in the U.S. News ranking.